
ATLAS application : Most errors that I have encountered



Joined: 28 Nov 09 Posts: 3 Credit: 286,827 RAC: 0

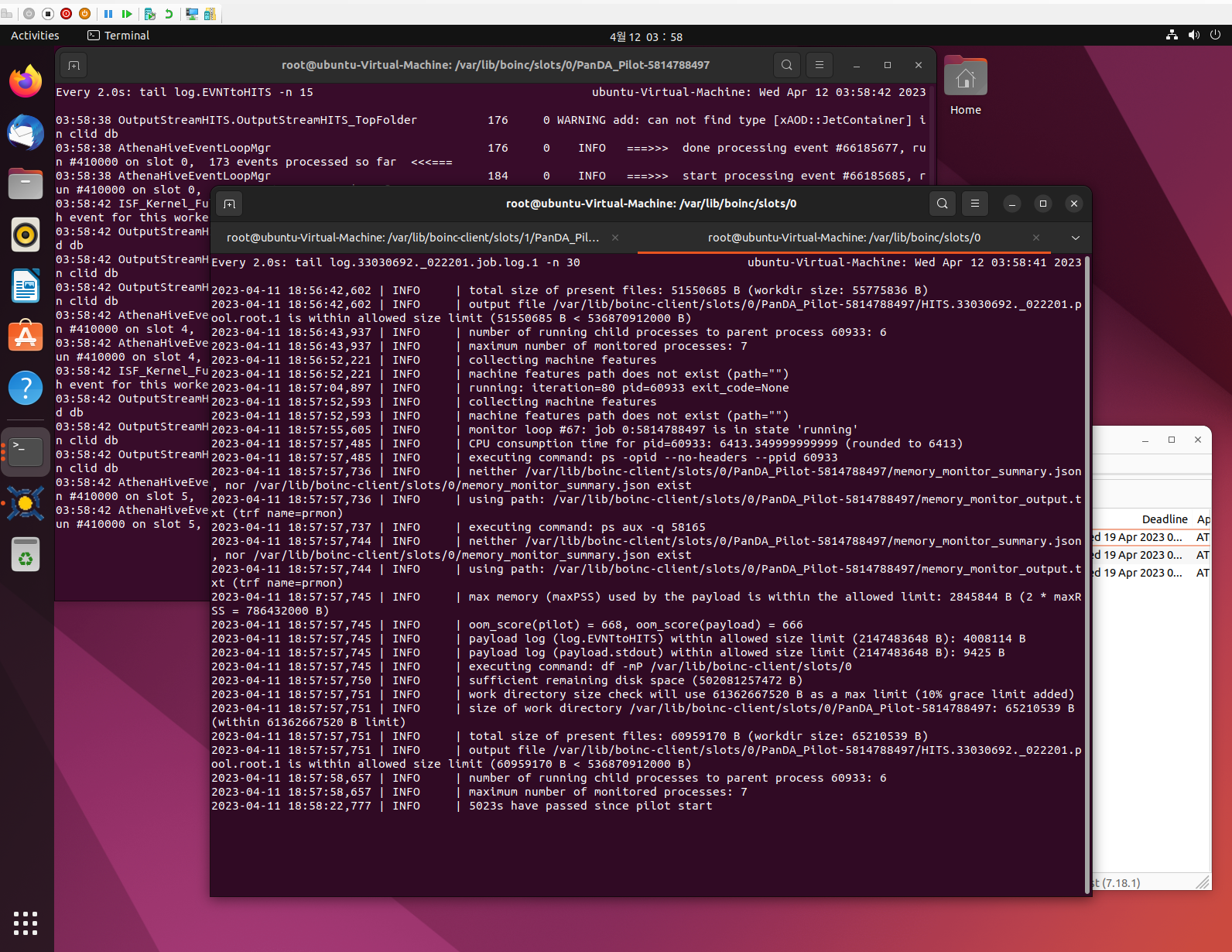

I've gone down the rabbit hole and found some problems, along with a temporary workaround.

**SSL error with the CERN CA certificate**

`2023-04-11 17:36:19,442 | WARNING | failed to load data from url=https://atlas-cric.cern.ch/cache/ddmendpoints.json, error: <urlopen error [SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed: self signed certificate in certificate chain (_ssl.c:1129)> .. trying to use data from cache=/var/lib/boinc-client/slots/0/agis_ddmendpoints.agis.ALL.json`

I'm not sure what causes it, but it somehow slows down the initial start of the task while it retries. If you visit the site in a browser, it throws a certificate warning, because the CERN CA is not a trusted certificate authority by default. Based on that, I added the CERN CA certificates (Grid, Root) from https://ca.cern.ch/cafiles/ with `update-ca-certificates`, and it still happens. However, it works if I run `wget https://atlas-cric.cern.ch/cache/ddmendpoints.json -O agis_ddmendpoints.agis.ALL.json` for every slot, which requires modifying the job file a little. Does anyone know a cleaner way to solve this (or is it not necessary)?

**CoolDB connection with IPv6, and WSL**

Initially, I tried to run it in WSL2, and it almost worked, but the lack of IPv6 support made it fail. In log.EVNTtoHITS there was a guaranteed failure: an attempt to connect to some COOLDB/TF200(?) database, and the detailed failure log showed the attempt going to an IPv6 address. I tried disabling IPv6 on the Linux side, assuming there might be an IPv4 address to fall back to, but there wasn't one, and on top of that I don't have an IPv6 address to connect with.

**Hyper-V with IPv6**

I really need my WSL functionality available, and even the VirtualBox version cannot get around the missing IPv6. Fortunately, there are tunneling solutions that carry IPv6 over IPv4 (6in4): https://handwiki.org/wiki/List_of_IPv6_tunnel_brokers. Hyper-V supports IPv6, and the performance degradation is fairly tolerable, in my opinion.

**Setting these up**

Watch out for library names.
For example, the libwxgtk... package has been renamed to libwxgtk3.0-gtk3-dev since Ubuntu 20. If you want to speed up these stages, add `-j<number of cores>` to every `make` call.

- https://lhcathome.cern.ch/lhcathome/forum_thread.php?id=4840
- https://lhcathome.cern.ch/lhcathome/forum_thread.php?id=5788
- https://cvmfs.readthedocs.io/en/stable/cpt-squid.html
- https://lhcathome.cern.ch/lhcathome/forum_thread.php?id=4758

I'm not sure whether I've cleared all possible errors, but the tasks seem to be running stably for now. I hope this helps anyone who really wants to join LHC@Home.
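The IPv6 failure described in this post (a host that resolves only to IPv6 addresses, on a machine with no IPv6 route) can be checked with a short diagnostic. The Python below is a hypothetical sketch, not part of the ATLAS or BOINC tooling: `resolve_by_family`, `needs_ipv6` and `has_ipv6_route` are illustrative names, and the probe address `2001:4860:4860::8888` (a public DNS resolver) is only an assumption; any global IPv6 address should do, because connecting a UDP socket sends no packets.

```python
import socket


def resolve_by_family(host, port=443):
    """Return the distinct IPv4 and IPv6 addresses that `host` resolves to."""
    found = {"ipv4": [], "ipv6": []}
    for family, _type, _proto, _canon, sockaddr in socket.getaddrinfo(
        host, port, type=socket.SOCK_STREAM
    ):
        if family == socket.AF_INET:
            key = "ipv4"
        elif family == socket.AF_INET6:
            key = "ipv6"
        else:
            continue  # ignore any other address families
        if sockaddr[0] not in found[key]:
            found[key].append(sockaddr[0])
    return found


def needs_ipv6(host, port=443):
    """True if `host` has IPv6 addresses but no IPv4 address to fall back to."""
    found = resolve_by_family(host, port)
    return bool(found["ipv6"]) and not found["ipv4"]


def has_ipv6_route(probe="2001:4860:4860::8888", port=53):
    """Best-effort check for a usable IPv6 route on this machine.

    Connecting a UDP socket only makes the kernel choose a route; nothing is
    sent. `probe` is an assumed public IPv6 address, not one from the thread.
    """
    if not socket.has_ipv6:
        return False
    try:
        with socket.socket(socket.AF_INET6, socket.SOCK_DGRAM) as sock:
            sock.connect((probe, port))
        return True
    except OSError:  # e.g. "Network is unreachable" when no IPv6 route exists
        return False
```

If `needs_ipv6(host)` is True for the database host named in log.EVNTtoHITS while `has_ipv6_route()` is False, the connection cannot succeed without some IPv6 connectivity, such as the 6in4 tunnel mentioned above.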

Joined: 4 Mar 17 Posts: 32 Credit: 12,140,909 RAC: 8,820

https://lhcathomedev.cern.ch/lhcathome-dev/forum_thread.php?id=614&postid=7968 The certificate error already appeared on the test server. It is something new with the Run 3 tasks since yesterday.

https://lhcathome.cern.ch/lhcathome/forum_thread.php?id=5978&postid=47994 I have the same: https://lhcathome.cern.ch/lhcathome/result.php?resultid=391664886

We will see when they fix it. So far it does not hurt much, just a somewhat longer idle time.

> I was told that [we] will likely run out of Run 2 simulation tasks to run on the prod project very soon, so I have gone ahead and released version 3 there so we can start running Run 3 tasks. Unfortunately I don't think we'll be able to resolve some of the remaining issues like the console monitoring before going live on prod but I think it's better to have something not quite perfect than no tasks at all.

https://lhcathomedev.cern.ch/lhcathome-dev/forum_thread.php?id=614&postid=8048
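The extra idle time follows the pattern in the warning quoted in the first post: the TLS connection to CRIC fails certificate verification, and the data is then read from a cached JSON file instead. Below is a minimal stdlib-only Python sketch of that fetch-then-fallback pattern. It is not the ATLAS pilot's actual code; `load_json_with_fallback` and its parameters are hypothetical. When `cafile` is given, only the CAs in that PEM file are trusted, so verification succeeds only if they actually sign the server's chain; with `cafile=None` the system's default trust store is used.

```python
import http.client
import json
import ssl
import urllib.request
from pathlib import Path


def load_json_with_fallback(url, cache_path, cafile=None, timeout=10.0):
    """Fetch JSON from `url`; on any network/TLS/parse failure, read `cache_path`.

    Hypothetical helper. If `cafile` is given, ONLY the CAs in that PEM file
    are trusted for server verification; otherwise the system defaults are used.
    """
    context = ssl.create_default_context(cafile=cafile)
    try:
        with urllib.request.urlopen(url, timeout=timeout, context=context) as resp:
            data = json.load(resp)
    except (OSError, ValueError, http.client.HTTPException) as exc:
        # URLError (including a wrapped CERTIFICATE_VERIFY_FAILED) and timeouts
        # are OSError subclasses; a malformed body raises JSONDecodeError (ValueError).
        print(f"failed to load data from url={url}, error: {exc} "
              f".. trying to use data from cache={cache_path}")
        with open(cache_path, encoding="utf-8") as fh:
            return json.load(fh)
    # Success: refresh the cache so a later fallback has current data.
    Path(cache_path).write_text(json.dumps(data), encoding="utf-8")
    return data
```

A call could look like `load_json_with_fallback("https://atlas-cric.cern.ch/cache/ddmendpoints.json", "agis_ddmendpoints.agis.ALL.json", cafile="cern-ca.pem")`, where `cern-ca.pem` is a hypothetical PEM bundle assembled from the certificates at https://ca.cern.ch/cafiles/. Note that the CA has to be visible to the process doing the fetch; adding it to a different trust store changes nothing.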

Joined: 28 Nov 09 Posts: 3 Credit: 286,827 RAC: 0

I see. It would be awkward to fix this by forcing participants to install the CERN CA certificates, so I understand why it is handled this way. As I said above, it can be worked around by adding the CERN CA to the machine and modifying the job XML file, something like this:

```xml
<!-- ../projects/lhcathome.cern.ch_lhcathome/ATLAS_job_2.54_x86_64-pc-linux-gnu.xml -->
<job_desc>
    <!-- Extra task: pre-fetch the CRIC endpoint list into the slot directory -->
    <task>
        <application>/usr/bin/wget</application>
        <command_line>https://atlas-cric.cern.ch/cache/ddmendpoints.json -O agis_ddmendpoints.agis.ALL.json</command_line>
    </task>
    <!-- Original ATLAS task -->
    <task>
        <application>run_atlas</application>
        <command_line>--nthreads $NTHREADS</command_line>
        <checkpoint_filename>boinc_mmap_file</checkpoint_filename>
    </task>
</job_desc>
```

It's a hacky fix, and it will be reset if the machine restarts. But if you want to cut the idle time, it works.

Joined: 15 Jun 08 Posts: 2679 Credit: 286,783,424 RAC: 77,077

This kind of modification is clearly not recommended, especially since it "saves" only a few seconds at the beginning of a task, until the inner ATLAS script switches to a fallback source. At the end of each task the same situation happens again, even though "agis_ddmendpoints.agis.ALL.json" is now present.

Besides that, the real issue is not the self-signed CRIC certificate. Instead, it's the missing CERN CA certificate that the CRIC certificate is checked against. That CA certificate needs to be present inside the ATLAS container, as the container doesn't use the CA certificates from the host.

The necessary modifications have to be done by the ATLAS developers at CERN. There are a few possible solutions:

1. Use and distribute an ATLAS container that includes an up-to-date CERN CA certificate.
2. Run a script inside the container that checks for the required certificates and gets/updates them from a reliable source, e.g. CVMFS.
3. Don't use CRIC at all. Instead, configure the tasks to get the objects from CVMFS.
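Option 2 could look roughly like the following Python sketch, run when the container starts. Everything in it is an assumption for illustration: the function name `sync_ca_certs`, the certificate file names, and the source and target directories would all have to be checked against the real setup. It copies each required certificate only when it is missing from the target or its content differs (compared by SHA-256), and fails loudly if the trusted source does not provide a required file.

```python
import hashlib
import shutil
from pathlib import Path


def _sha256(path):
    """Hex SHA-256 digest of a file's contents."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def sync_ca_certs(required, source_dir, target_dir):
    """Install or update each required CA file from `source_dir` into `target_dir`.

    Hypothetical helper. Returns the names of files that were copied.
    Raises FileNotFoundError if a required file is missing from the source.
    """
    source_dir, target_dir = Path(source_dir), Path(target_dir)
    target_dir.mkdir(parents=True, exist_ok=True)
    changed = []
    for name in required:
        src = source_dir / name
        dst = target_dir / name
        if not src.is_file():
            raise FileNotFoundError(f"required CA certificate not found in source: {src}")
        if dst.is_file() and _sha256(dst) == _sha256(src):
            continue  # already present and identical
        shutil.copy2(src, dst)  # copy contents and metadata
        changed.append(name)
    return changed
```

A start-up call might be `sync_ca_certs(["CERN-Root-2.pem", "CERN-GridCA.pem"], "/cvmfs/grid.cern.ch/etc/grid-security/certificates", "/etc/grid-security/certificates")`, with all three names assumed rather than confirmed. If the target directory is used as an OpenSSL CA path (rather than loading the files individually), its hash links would also need regenerating, e.g. with `openssl rehash`.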

Joined: 28 Nov 09 Posts: 3 Credit: 286,827 RAC: 0

Yes, you are right, and I agree with your argument; maybe I should have added a warning. It is a minor change, but an important step for security reasons. In my opinion, the second option is a good one: it takes advantage of the VM, makes DNS poisoning hard, and keeps the security check intact. But it's up to the CERN developers.

©2025 CERN