Message boards : Number crunching : LHC is NOT using CPU threads above 28

| Author | Message |

|---|---|

|

Joined: 12 Jun 18 Posts: 138 Credit: 57,201,256 RAC: 14,515 |

I just checked all my computers, and CPUs with 28 or fewer threads fill up with LHC WUs. CPUs with 32, 36, 40 & 44 threads get fewer WUs than a 28-thread CPU. E.g., an E5-2699v4 has 22c/44t with 4 GPU WUs. That leaves 40 threads for LHC WUs. I do not use VirtualBox, so I get WUs for SixTrack, native Theory & ATLAS. I'm not running any other CPU project. Yet it's only running 23 WUs and leaving 17 threads idle. I also have max CPUs set to 1 in preferences. It has 32 GB RAM and is using 17.5 GB now. I have not created an app_config file, and my cc_config.xml has <ncpus>-1</ncpus>. Memory is set to 95, 95 & 95% with 100% CPU. Plenty of space left on the SSD. For CPUs with 32 or more threads, if LHC plus another CPU project are allowed, then the total number of running WUs is limited. If the LHC project is suspended, then the non-LHC project starts running all the WUs it rightly should. Any idea why so many CPU threads are not being used???

|

|

Joined: 27 Sep 08 Posts: 899 Credit: 771,492,627 RAC: 181,171 |

If you choose "no limit" for both options, then you should get more. However, there is then a limit of 50 WUs queued at once (excluding SixTrack), and with 32 GB you'll be RAM-limited quickly. You'll need 64/128 GB to really use the full power of that CPU. |

|

Joined: 12 Jun 18 Posts: 138 Credit: 57,201,256 RAC: 14,515 |

This has nothing to do with how many WUs the server sends me. It is about how many WUs a given CPU will run at once. I've never seen a scenario where the native applications used 32 GB RAM.

|

|

Joined: 15 Jun 08 Posts: 2724 Credit: 299,002,782 RAC: 47,790 |

You may want to dig a bit deeper into your BOINC client. As I mentioned in a related post, the server calculates a value for <rsc_memory_bound> for each task it sends to your client. This can be found in your local client_state.xml file in each <workunit> section. ATLAS example: <rsc_memory_bound>2500000000.000000</rsc_memory_bound> This means (roughly) 2.5 GB. The client calculates the sum of the current "working set sizes" of all running tasks and adds the <rsc_memory_bound> of the task that should be started next. If this sum exceeds the amount of RAM you allow your client to use, it will not start a new task. In my example the <rsc_memory_bound> is calculated for a 1-core setup. Unlimited setups use much higher values (IIRC around 10 GB), as an 8-core base is used for the calculation. Hence, a 32 GB host will never start more than 3 of those tasks concurrently, regardless of how many free cores are available. |
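The start rule described above can be sketched in a few lines of Python. This is only an illustration of the rule as stated in this post, not the BOINC client's actual code. The XML fragment is a simplified, hypothetical stand-in for client_state.xml (a real file has many more fields), the workunit name is made up, and the helper names `memory_bounds` and `tasks_started` are invented for this sketch. The 3 GB working set in the second call is an arbitrary illustrative figure, not a measurement.

```python
import xml.etree.ElementTree as ET

# Simplified, hypothetical fragment; the workunit name is invented.
SAMPLE = """
<client_state>
  <workunit>
    <name>example_atlas_wu</name>
    <rsc_memory_bound>2500000000.000000</rsc_memory_bound>
  </workunit>
</client_state>
"""

def memory_bounds(xml_text):
    """Map each workunit name to its rsc_memory_bound in bytes."""
    root = ET.fromstring(xml_text)
    return {
        wu.findtext("name"): float(wu.findtext("rsc_memory_bound"))
        for wu in root.iter("workunit")
    }

def tasks_started(allowed_ram, memory_bound, working_set, max_tasks=64):
    """Start identical tasks one at a time under the rule from the post.

    A further task starts only while
    (sum of working sets of running tasks) + memory_bound <= allowed_ram.
    max_tasks stands in for the number of free cores (assumption).
    """
    running = 0
    while running < max_tasks and running * working_set + memory_bound <= allowed_ram:
        running += 1
    return running

GB = 10**9
allowed = 32 * GB * 95 // 100  # 95% of a 32 GB host

print(memory_bounds(SAMPLE))
# Working set as large as the ~10 GB bound: the "3 tasks" case from the post.
print(tasks_started(allowed, 10 * GB, 10 * GB))
# Hypothetical smaller real working set (3 GB): more tasks fit under the same rule.
print(tasks_started(allowed, 10 * GB, 3 * GB))
```

Under this reading, the number of concurrent tasks depends on the measured working sets of the tasks already running, with the full <rsc_memory_bound> counted only for the next task to start.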

|

Joined: 12 Jun 18 Posts: 138 Credit: 57,201,256 RAC: 14,515 |

That's not my experience. Rig-10 has 20c/40t and is currently running five 8C ATLAS jobs with only 24 GB RAM.

|

Joseph Stateson Joined: 10 Aug 08 Posts: 15 Credit: 741,917 RAC: 0 |

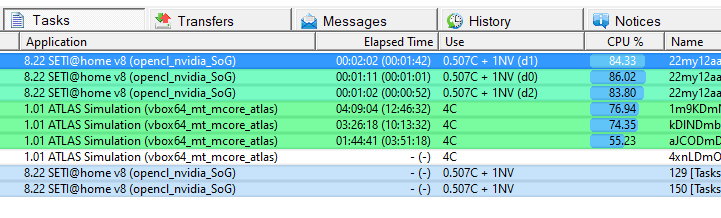

I have been running 3 ATLAS tasks of 4 CPUs each and noticed that one of them frequently has lower CPU usage than the other two (see screenshot). There are 20 threads (10 cores) and 32 GB RAM. Task resources show all 20 threads being used, but averaging 75%. The BOINC client allocates 3*4 + 3 = 15, so there are 5 threads left over, which agrees with my 75% allowance for BOINC. Something seems wrong. Typically CPU tasks are at 95% or higher. I'm going to limit jobs to 2 and see if CPU usage increases. If usage is only 71%, then 1 out of 4 CPUs is not being used, IMHO. Does anyone else see this usage? I assume BoincTasks is calculating usage correctly. |
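The thread arithmetic in this post can be checked with a short Python sketch. The post does not say what the extra 3 in "3*4 + 3" are; they are treated here as three single-thread tasks, which is an assumption. The function names are invented for illustration.

```python
import math

def thread_budget(total_threads, cpu_percent):
    """Threads the client may use when limited to cpu_percent of the CPUs."""
    return math.floor(total_threads * cpu_percent / 100)

def allocated_threads(multi_tasks, threads_per_task, single_tasks):
    """Threads claimed by multi-thread tasks plus single-thread tasks."""
    return multi_tasks * threads_per_task + single_tasks

TOTAL = 20                           # 10 cores / 20 threads, from the post
budget = thread_budget(TOTAL, 75)    # 75% allowance for BOINC
used = allocated_threads(3, 4, 3)    # 3 ATLAS tasks x 4 threads, plus 3 (assumed single-thread)
idle = TOTAL - used

print(budget, used, idle)
# Threads' worth of CPU a 4-thread task is using at 71% usage.
print(round(0.71 * 4, 2))
```

With these numbers the allocation matches the budget exactly, so a host-wide average near 75% would be expected from the preference alone. The 71% figure for a single task is a separate question about that task's own 4 threads.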

©2026 CERN