61)

Message boards :

ATLAS application :

16, 24 or 32 core ATLAS going to become available?

(Message 33188)

Posted 2 Dec 2017 by marmot Post: Where is the 8-core limit? It could be pragmatism, although there is an Intel Xeon Phi machine (user pomegranate) attempting to run LHC@home now, so maybe they'll consider increasing the parallelism. The 8-core limit is in David Cameron's post on current ATLAS WU information. Cameron (Message 29560): "Each task can use from 1 to 8 cores according to what is set for "Max # CPUs" in the LHC@Home project preferences." He says it was set because of their efficiency testing, and he posted the graph of results. Cameron (Message 32802): "Above 8 it gets even worse so this is why we set 8 to be the maximum cores." This implies that some algorithmic change will be needed to adapt to the Xeon Phi, if it looks theoretically possible to gain anything from more parallelism. I tried three 24-core and three 16-core tasks to test whether undocumented core counts were available, and all but one of them failed to start (white screens in the VBox Manager). The one that started was a 16-core task, which had a string of errors and finally listed its virtual cores as 1..16 with 10, 11 and 12 missing. It made it to the CERN login screen and used only 1% CPU for 2 hours. I'll try an 11-core and a 12-core job to see whether they are still supported, but his remarks about efficiency with the current application design mean these would probably not be useful.

62)

Message boards :

Number crunching :

VM environment need to be cleaned up.

(Message 33187)

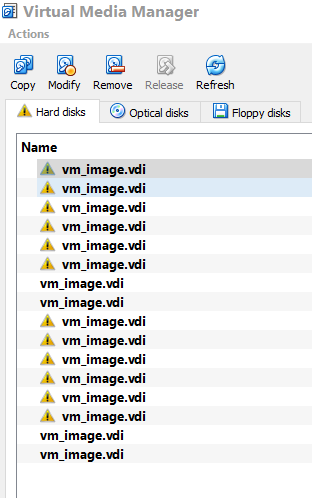

Posted 2 Dec 2017 by marmot Post: "Yes Marmot, I have mentioned that several times here but with no reply, so I always just check my VB Manager/File/Virtual Media Manager and *remove* them myself (for a long time now)." When the service restarts, almost all of the broken links to VDIs get cleaned up. So, after all VMs are stopped, try using your task/process manager to terminate the VBoxSVC.exe process found under services. Or, more elegantly, use the Windows Services management console to stop and restart that service. A batch file with the appropriate net stop VBoxSVC.exe, then net start, can automate it. There is no need to restart the computer. I think that if they ran vboxwrapper_xxxxxx at normal priority instead of idle, the VBoxManage child processes it calls would inherit normal priority, and this issue would mostly clear up. The wrapper barely uses any CPU time slices, but it is managing the WUs in an environment where the CPUs are pegged at 100% and users are trying to fit as many work units into RAM as possible, so the wrapper needs to be dominant to accomplish its management tasks.
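The clean-up described in this post (stop every VM, then restart VirtualBox's VBoxSVC.exe) can be scripted. What follows is a minimal Python sketch, assuming a Windows host with VBoxManage on the PATH (pass the full path otherwise). Whether VBoxSVC is registered as a Windows service on a given install is an assumption this sketch avoids: it refuses to act while `VBoxManage list runningvms` still reports VMs, then ends VBoxSVC.exe with `taskkill`. VirtualBox starts VBoxSVC again the next time a client such as VBoxManage or the VirtualBox Manager connects. The function names are hypothetical, not part of BOINC or VirtualBox.

```python
import subprocess


def running_vm_names(list_output):
    """Parse `VBoxManage list runningvms` output, which prints one
    '"<name>" {<uuid>}' line per running VM, into a list of VM names."""
    names = []
    for line in list_output.splitlines():
        line = line.strip()
        # Each entry starts with the quoted VM name; skip anything else.
        if line.startswith('"') and '"' in line[1:]:
            names.append(line[1:line.index('"', 1)])
    return names


def restart_vboxsvc(vboxmanage="VBoxManage"):
    """Hypothetical helper: kill VBoxSVC.exe so it reloads its media
    registry when it is next started.

    It only acts when no VM is running, because killing VBoxSVC under
    running BOINC VMs could leave them unmanageable.
    """
    result = subprocess.run(
        [vboxmanage, "list", "runningvms"],
        capture_output=True, text=True, check=True,
    )
    still_running = running_vm_names(result.stdout)
    if still_running:
        raise RuntimeError("Suspend these VMs first: " + ", ".join(still_running))
    # /F forces termination; a non-zero exit just means VBoxSVC was not running.
    subprocess.run(["taskkill", "/IM", "VBoxSVC.exe", "/F"], check=False)
```

Suspended BOINC tasks leave their VMs in a saved state rather than running, so a host with every LHC@home task suspended (a few at a time, as the posts recommend) should pass the check.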

63)

Message boards :

News :

Aborted Work Units

(Message 33186)

Posted 2 Dec 2017 by marmot Post: "VirtualBox 5.2 is not 100% compatible with vboxwrapper used by the ATLAS project." You might have something there. My Windows 7 Pro machine was getting a constant stream of "Postponed: VM is unmanageable" errors (on ATLAS WUs only) on 5.2.0 and 5.2.2. Since downgrading to 5.1.30, there have been no more of those errors in 3 days.

64)

Message boards :

Number crunching :

VM environment need to be cleaned up.

(Message 33178)

Posted 30 Nov 2017 by marmot Post: This error sometimes shows up in BOINC Manager. Probably related: I noticed that the job with that message had collided with an undeleted previous VDI in its claimed slot. Eventually the VBox Media Manager will list hundreds of broken links to VDI files in the BOINC data\slot directories, because VBoxManage.exe fails to complete the deletion of the slot VDI file. The Windows event logs contain no warning or information entry about VBoxManage.exe or vboxwrapper_xxxxx.exe closing unexpectedly. Raising the priority of VBoxManage.exe from idle to normal seems to have reduced the number of broken links by 90+%. Is it a file-locked error, or a time-out before job cleanup? Does vboxwrapper double-check that VDI remnants are cleaned up? (This happens on all machines, which run various versions of VBox: 5.1.26, 5.1.28 and 5.2.0.)
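The post raises VBoxManage.exe from idle to normal priority by hand. What follows is a minimal sketch of automating that with the third-party psutil package. It assumes Windows, where psutil exposes the `IDLE_PRIORITY_CLASS` and `NORMAL_PRIORITY_CLASS` constants, and enough rights to change the priority of processes started by the BOINC client. Because vboxwrapper launches short-lived VBoxManage.exe calls, a poller like this only catches the calls that outlive its polling interval, so treat it as an experiment rather than a fix. `needs_boost`, `boost_once` and `watch` are hypothetical names.

```python
import time

try:
    import psutil  # third-party: pip install psutil
except ImportError:  # keep the pure helper usable without psutil
    psutil = None

TARGET_NAMES = {"vboxmanage.exe"}


def needs_boost(name, priority, idle_priority, targets=TARGET_NAMES):
    """True if the process is a target (VBoxManage.exe) still at idle priority."""
    return name.lower() in targets and priority == idle_priority


def boost_once():
    """Raise idle-priority VBoxManage.exe processes to normal priority.

    Returns how many processes were changed (always 0 off Windows or
    when psutil is missing).
    """
    if psutil is None or not hasattr(psutil, "IDLE_PRIORITY_CLASS"):
        return 0
    changed = 0
    for proc in psutil.process_iter(["name"]):
        try:
            name = proc.info["name"] or ""
            if needs_boost(name, proc.nice(), psutil.IDLE_PRIORITY_CLASS):
                proc.nice(psutil.NORMAL_PRIORITY_CLASS)
                changed += 1
        except (psutil.NoSuchProcess, psutil.AccessDenied):
            continue  # the call already finished, or we lack the rights
    return changed


def watch(interval_s=0.5, rounds=None):
    """Poll repeatedly; rounds=None means run until interrupted."""
    done = 0
    while rounds is None or done < rounds:
        boost_once()
        time.sleep(interval_s)
        done += 1
```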

65)

Message boards :

Number crunching :

Error while computing/too many errors

(Message 33177)

Posted 30 Nov 2017 by marmot Post: This looked like the VBox Media Manager lists on all of my servers, and those entries had to be deleted one at a time, with no multi-selection. Upgrading to the latest VBox didn't help (but that machine now seems to save LHC VMs more quickly on suspend). A solution I discovered in a fit of frustration: suspend all WUs (4 at a time), close BOINC and use a process manager to kill the VBoxSVC.exe service. Once the VBox Manager interface is reopened, the environment is cleaned up. All the broken links to the BOINC data\slot *.vdi files are gone, without having to delete them one by one and without having to reboot the machine. The number of broken links to slot VDIs (30, 50, 60 per day) appears to have dropped to a few per day since I started using Process Hacker to force VBoxManage.exe from the default idle priority to normal priority. I did this on the assumption that it was a time-out issue on a computer where all cores are running at maximum. (I've seen crypto wallets and other apps crash regularly until their priority was raised.) This could be a coincidence, so I'd like to know if it helps anyone else.

66)

Message boards :

ATLAS application :

credits for runtime, not for cputime ?

(Message 33176)

Posted 30 Nov 2017 by marmot Post: Labor is one of the cornerstones of valuing services and production, and CPU labor (cycles) is what LHC, or any other BOINC project, is receiving. If LHC is using 8 CPUs and only giving credit for 1 CPU when the other 7 could be used by other projects that also offer credit, then the payment system is predatory. I know the argument can be made that the efficiency gain means faster throughput and so the payment is equivalent, but... that's not what my data sets from actual measurements show. Single-core ATLAS gives out 1500% to 2000% more credit per core than the 8-core jobs, and even 100% more than the most efficient 4-core configuration. For comparison, 2-core Theory performed ~10% better than 1-core WUs, and 8-core Theory WUs (despite 33% CPU inefficiency) still paid only ~15% less than 1-core WUs. All tests were done on the same machine and compared against another identically configured machine, with similar results. If the multi-core implementations of Theory can pay nearly equivalent credit per CPU core claimed, then ATLAS should be able to do the same; as it stands, the 8-core jobs are an order of magnitude worse in payment per core. I was shocked to see that it's approximately 9x better to run 8 single-core ATLAS tasks than to run 3x 8-core ATLAS tasks.
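The comparison in this post comes down to credit per core-hour: the credit a task earns, divided by the cores it reserved multiplied by its wall-clock run time. What follows is a small Python sketch of that normalization. The function names and the sample figures in the usage note are hypothetical. They only illustrate the arithmetic and are not the measurements reported here.

```python
def credit_per_core_hour(credit, cores, runtime_s):
    """Credit earned per core-hour of wall-clock time a task reserved.

    A task that holds 8 cores for 1 hour has consumed 8 core-hours,
    whether or not all 8 cores stayed busy.
    """
    if cores <= 0 or runtime_s <= 0:
        raise ValueError("cores and runtime_s must be positive")
    return credit / (cores * runtime_s / 3600.0)


def payout_ratio(task_a, task_b):
    """How many times more credit per core-hour task_a pays than task_b.

    Each task is a (credit, cores, runtime_s) tuple.
    """
    return credit_per_core_hour(*task_a) / credit_per_core_hour(*task_b)
```

For example, with made-up numbers, a 1-core task earning 300 credits in 10 hours pays 30 credits per core-hour, and an 8-core task earning 400 credits in 2 hours pays 25. `payout_ratio((300, 1, 36000), (400, 8, 7200))` is therefore 1.2.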

67)

Message boards :

ATLAS application :

16, 24 or 32 core ATLAS going to become available?

(Message 33175)

Posted 30 Nov 2017 by marmot Post: There was actually a performance gain at 11 cores comparable to the best gain at 4 cores in her chart. I wonder why they then set the new limit to 8. Maybe that data set is obsolete, and the new WU version shows a steady decline in efficiency past 8 cores with no gain at 11. There is still a potential RAM gain from a shared operating system and OS caching for 16-, 24- or 32-core ATLAS, if that means bundling 2, 3 or 4 individual multi-core WUs as the multi-core Theory application does. 8-core Theory just has 8 slots running 8 disentangled jobs. So 16-, 24- or 32-core ATLAS would have 2, 3 or 4 slots, each running an 8-core job.
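The RAM argument in this post can be written as a simple model. Assume, hypothetically, that each VM carries a fixed operating-system and cache overhead $M_{\mathrm{OS}}$, and that each 8-core job inside it needs $M_{\mathrm{job}}$ however many jobs share the VM. Neither figure is measured here. Running $k$ jobs in separate VMs, compared with bundling them in one VM, then gives:

$$
\begin{aligned}
M_{\text{separate}} &= k\,(M_{\mathrm{OS}} + M_{\mathrm{job}}),\\
M_{\text{bundled}} &= M_{\mathrm{OS}} + k\,M_{\mathrm{job}},\\
M_{\text{separate}} - M_{\text{bundled}} &= (k-1)\,M_{\mathrm{OS}}.
\end{aligned}
$$

Under this model, 16-, 24- and 32-core ATLAS tasks ($k = 2, 3, 4$) would save $M_{\mathrm{OS}}$, $2M_{\mathrm{OS}}$ and $3M_{\mathrm{OS}}$ respectively. Any extra memory the bundling job manager itself needs would reduce that saving.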

68)

Message boards :

ATLAS application :

16, 24 or 32 core ATLAS going to become available?

(Message 33169)

Posted 30 Nov 2017 by marmot Post: It would be a great RAM saver if they are.

69)

Message boards :

Number crunching :

Checklist Version 3 for Atlas@Home (and other VM-based Projects) on your PC

(Message 33168)

Posted 30 Nov 2017 by marmot Post: To clean up the VM environment, you just need to stop the VM service and restart it, or kill it in a process manager. When it restarts, it will clean up the environment. There is no need to restart the computer. It's a real time saver, and I've used it maybe 30 times already.

70)

Message boards :

Number crunching :

Some WU's tagged computation error but logs looks good

(Message 33167)

Posted 30 Nov 2017 by marmot Post: I'm still getting 2 or 3 of these a day (out of about 60-90 run). They are significant because each one is a loss of 40,000-60,000 CPU cycles, and all the work within the Theory sims was completed and reported. What does "Condor exited with return value N/A" mean?

71)

Message boards :

ATLAS application :

Only getting 1 ATLAS WU. "No tasks are available for ATLAS Simulation"

(Message 33062)

Posted 14 Nov 2017 by marmot Post: "The BOINC App needs to be in the same folder as the Data folder." "NO." That is the definition of portable apps: application and data forks within the same main folder.

Not a portable install. The C:\programmFiles\ folder is on the OS partition (unless you create a hard directory link to another partition), so it will be lost when the OS is wiped for a reinstall. It also won't be transferred when you take the external USB/thumb drive or swappable partition with the data folders and move it to another computer. https://en.wikipedia.org/wiki/Portable_application

72)

Message boards :

Number crunching :

Suggestions to possibly lower error rates a bit

(Message 33061)

Posted 14 Nov 2017 by marmot Post:

* When any LHC@Home task is suspended on the BOINC client, the server should refuse to send any new tasks. This is possible, since other BOINC projects I've run have refused new work while a local task is suspended. The end user might be dealing with problems in particular tasks, a computer problem, or high-priority work that precludes BOINC tasks, and may have manually suspended tasks to address those issues. LHC@Home is particularly prone to this: trying to suspend 32 tasks at once always ends with aborted VMs instead of saved states, so tasks have to be suspended 4 to 8 at a time.
* When starting up 16 to 40 LHC@Home tasks from a cold BOINC start, stagger the startups (I'm not sure this is possible within current BOINC client software limits).
* If staggering isn't possible, then increase the number of retries to get a stable connection to Condor, or increase the amount of time the task waits before timing out the connection. Make the tasks more stubborn (more fault tolerant) before they give up the ghost (computation error).

Over 70% of my errors occur within the first 20 minutes after task startup, and they are mostly communication issues. Some come from a data spike on a cold BOINC startup. Others come from another spike in the early-morning local hours, when the ISP resets my modem and all the tasks try to reestablish contact with Condor. The second-largest source of errors is tasks shutting down without doing any work. Is that because Condor had no work to send down to the VM in that time period?
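The third suggestion (more retries and longer waits before a task gives up on Condor) is commonly implemented as exponential backoff with jitter. The second (staggered startups) can be done with fixed start offsets. What follows is a minimal Python sketch of both techniques. It is an illustration only, not how vboxwrapper or the LHC@home VM bootstrap scripts currently behave. `connect` stands for whatever opens the connection to the Condor server (port 9618 in the task logs), and all names and default delays are assumptions.

```python
import random
import time


def connect_with_backoff(connect, max_attempts=8, base_delay_s=5.0,
                         max_delay_s=300.0, sleep=time.sleep, rng=random.random):
    """Call connect() until it succeeds, waiting longer after each failure.

    The nominal wait doubles each attempt (5 s, 10 s, 20 s, ...) up to
    max_delay_s. Jitter scales it to 50-100% so that dozens of tasks
    started together do not all retry at the same instant.
    """
    for attempt in range(max_attempts):
        try:
            return connect()
        except OSError:  # ConnectionError, socket timeouts, etc.
            if attempt == max_attempts - 1:
                raise  # out of retries: give up (computation error)
            delay = min(max_delay_s, base_delay_s * 2 ** attempt)
            sleep(delay * (0.5 + rng() / 2))


def stagger_offsets(n_tasks, spacing_s=30.0):
    """Start-time offsets that spread n_tasks cold starts spacing_s apart."""
    return [i * spacing_s for i in range(n_tasks)]
```

Passing `sleep` and `rng` as parameters keeps the retry schedule testable without real waiting. In real use, the defaults apply.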

73)

Message boards :

Number crunching :

Some WU's tagged computation error but logs looks good

(Message 33060)

Posted 14 Nov 2017 by marmot Post: The log appears to show that the task ended successfully, but it gets put into the error category. Can someone else spot what I'm missing about these? There are more than 3, but I'm linking just 3 examples, with the full text of one: Task 164085532, Task 164109292, Task 164278255:

<core_client_version>7.8.3</core_client_version>
<![CDATA[
<message>
finish file present too long</message>
<stderr_txt>
2017-11-13 06:01:52 (14908): Detected: vboxwrapper 26197
2017-11-13 06:01:52 (14908): Detected: BOINC client v7.7
2017-11-13 06:02:08 (14908): Detected: VirtualBox VboxManage Interface (Version: 5.1.22)
2017-11-13 06:02:09 (14908): Detected: Heartbeat check (file: 'heartbeat' every 1200.000000 seconds)
2017-11-13 06:02:09 (14908): Successfully copied 'init_data.xml' to the shared directory.
2017-11-13 06:02:22 (14908): Create VM. (boinc_bca0ac41590654b4, slot#24)
2017-11-13 06:02:29 (14908): Setting Memory Size for VM. (512MB)
2017-11-13 06:02:41 (14908): Setting CPU Count for VM. (1)
2017-11-13 06:02:58 (14908): Setting Chipset Options for VM.
2017-11-13 06:03:18 (14908): Setting Boot Options for VM.
2017-11-13 06:03:20 (14908): Setting Network Configuration for NAT.
2017-11-13 06:03:26 (14908): Enabling VM Network Access.
2017-11-13 06:03:26 (14908): Disabling USB Support for VM.
2017-11-13 06:03:26 (14908): Disabling COM Port Support for VM.
2017-11-13 06:03:27 (14908): Disabling LPT Port Support for VM.
2017-11-13 06:03:27 (14908): Disabling Audio Support for VM.
2017-11-13 06:03:27 (14908): Disabling Clipboard Support for VM.
2017-11-13 06:03:28 (14908): Disabling Drag and Drop Support for VM.
2017-11-13 06:03:28 (14908): Adding storage controller(s) to VM.
2017-11-13 06:03:28 (14908): Adding virtual disk drive to VM. (vm_image.vdi)
2017-11-13 06:03:29 (14908): Adding VirtualBox Guest Additions to VM.
2017-11-13 06:03:29 (14908): Adding network bandwidth throttle group to VM. (Defaulting to 1024GB)
2017-11-13 06:03:29 (14908): forwarding host port 52773 to guest port 80
2017-11-13 06:03:29 (14908): Enabling remote desktop for VM.
2017-11-13 06:03:30 (14908): Enabling shared directory for VM.
2017-11-13 06:03:30 (14908): Starting VM using VBoxManage interface. (boinc_bca0ac41590654b4, slot#24)
2017-11-13 06:03:34 (14908): Successfully started VM. (PID = '6336')
2017-11-13 06:03:34 (14908): Reporting VM Process ID to BOINC.
2017-11-13 06:03:34 (14908): Guest Log: BIOS: VirtualBox 5.1.22
2017-11-13 06:03:34 (14908): Guest Log: BIOS: ata0-0: PCHS=16383/16/63 LCHS=1024/255/63
2017-11-13 06:03:34 (14908): VM state change detected. (old = 'PoweredOff', new = 'Running')
2017-11-13 06:03:34 (14908): Detected: Web Application Enabled (http://localhost:52773)
2017-11-13 06:03:34 (14908): Detected: Remote Desktop Enabled (localhost:52778)
2017-11-13 06:03:34 (14908): Preference change detected
2017-11-13 06:03:34 (14908): Setting CPU throttle for VM. (100%)
2017-11-13 06:03:34 (14908): Setting checkpoint interval to 600 seconds. (Higher value of (Preference: 60 seconds) or (Vbox_job.xml: 600 seconds))
2017-11-13 06:03:36 (14908): Guest Log: BIOS: Boot : bseqnr=1, bootseq=0032
2017-11-13 06:03:36 (14908): Guest Log: BIOS: Booting from Hard Disk...
2017-11-13 06:03:38 (14908): Guest Log: BIOS: KBD: unsupported int 16h function 03
2017-11-13 06:03:38 (14908): Guest Log: BIOS: AX=0305 BX=0000 CX=0000 DX=0000
2017-11-13 06:04:13 (14908): Guest Log: vboxguest: misc device minor 56, IRQ 20, I/O port d020, MMIO at 00000000f0400000 (size 0x400000)
2017-11-13 06:04:33 (14908): Guest Log: VBoxService 4.3.28 r100309 (verbosity: 0) linux.amd64 (May 13 2015 17:11:31) release log
2017-11-13 06:04:33 (14908): Guest Log: 00:00:00.000137 main Log opened 2017-11-13T12:04:33.097548000Z
2017-11-13 06:04:33 (14908): Guest Log: 00:00:00.000444 main OS Product: Linux
2017-11-13 06:04:33 (14908): Guest Log: 00:00:00.000551 main OS Release: 4.1.39-28.cernvm.x86_64
2017-11-13 06:04:33 (14908): Guest Log: 00:00:00.000613 main OS Version: #1 SMP Tue Mar 14 08:13:19 CET 2017
2017-11-13 06:04:33 (14908): Guest Log: 00:00:00.000670 main OS Service Pack: #1 SMP Tue Mar 14 08:13:19 CET 2017
2017-11-13 06:04:33 (14908): Guest Log: 00:00:00.000727 main Executable: /usr/sbin/VBoxService
2017-11-13 06:04:33 (14908): Guest Log: 00:00:00.000729 main Process ID: 2719
2017-11-13 06:04:33 (14908): Guest Log: 00:00:00.000730 main Package type: LINUX_64BITS_GENERIC
2017-11-13 06:04:33 (14908): Guest Log: 00:00:00.009969 main 4.3.28 r100309 started. Verbose level = 0
2017-11-13 06:05:44 (14908): Guest Log: [INFO] Mounting the shared directory
2017-11-13 06:05:44 (14908): Guest Log: [INFO] Shared directory mounted, enabling vboxmonitor
2017-11-13 06:05:45 (14908): Guest Log: [DEBUG] Testing network connection to cern.ch on port 80
2017-11-13 06:05:45 (14908): Guest Log: [DEBUG] Connection to cern.ch 80 port [tcp/http] succeeded!
2017-11-13 06:05:45 (14908): Guest Log: [DEBUG] 0
2017-11-13 06:05:45 (14908): Guest Log: [DEBUG] Testing CVMFS connection to lhchomeproxy.cern.ch on port 3125
2017-11-13 06:05:46 (14908): Guest Log: [DEBUG] Connection to lhchomeproxy.cern.ch 3125 port [tcp/a13-an] succeeded!
2017-11-13 06:05:46 (14908): Guest Log: [DEBUG] 0
2017-11-13 06:05:46 (14908): Guest Log: [DEBUG] Testing VCCS connection to vccs.cern.ch on port 443
2017-11-13 06:05:46 (14908): Guest Log: [DEBUG] Connection to vccs.cern.ch 443 port [tcp/https] succeeded!
2017-11-13 06:05:46 (14908): Guest Log: [DEBUG] 0
2017-11-13 06:05:46 (14908): Guest Log: [DEBUG] Testing connection to Condor server on port 9618
2017-11-13 06:05:47 (14908): Guest Log: [DEBUG] Connection to vccondor01.cern.ch 9618 port [tcp/condor] succeeded!
2017-11-13 06:05:47 (14908): Guest Log: [DEBUG] 0
2017-11-13 06:05:47 (14908): Guest Log: [DEBUG] Probing CVMFS ...
2017-11-13 06:05:48 (14908): Guest Log: Probing /cvmfs/grid.cern.ch... OK
2017-11-13 06:06:24 (14908): Guest Log: Probing /cvmfs/sft.cern.ch... OK
2017-11-13 06:06:25 (14908): Guest Log: VERSION PID UPTIME(M) MEM(K) REVISION EXPIRES(M) NOCATALOGS CACHEUSE(K) CACHEMAX(K) NOFDUSE NOFDMAX NOIOERR NOOPEN HITRATE(%) RX(K) SPEED(K/S) HOST PROXY ONLINE
2017-11-13 06:06:25 (14908): Guest Log: 2.2.0.0 3423 1 19600 5072 14 1 481687 10240001 2 65024 0 20 95 20792 0 http://cvmfs.fnal.gov/cvmfs/grid.cern.ch http://131.225.205.134:3125 1
2017-11-13 06:06:34 (14908): Guest Log: [INFO] Reading volunteer information
2017-11-13 06:06:34 (14908): Guest Log: [INFO] Volunteer: marmot (373413) Host: 10506240
2017-11-13 06:06:34 (14908): Guest Log: [INFO] VMID: af6a5502-bdf8-44f3-94c4-46386c9eddf5
2017-11-13 06:06:34 (14908): Guest Log: [INFO] Requesting an X509 credential from LHC@home
2017-11-13 06:06:38 (14908): Guest Log: [INFO] Running the fast benchmark.
2017-11-13 06:09:05 (14908): Guest Log: [INFO] Machine performance 4.08 HEPSEC06
2017-11-13 06:09:05 (14908): Guest Log: [INFO] Theory application starting. Check log files.
2017-11-13 06:09:05 (14908): Guest Log: [DEBUG] HTCondor ping
2017-11-13 06:09:05 (14908): Guest Log: [DEBUG] 0
2017-11-13 06:10:27 (14908): Guest Log: [INFO] New Job Starting in slot1
2017-11-13 06:10:27 (14908): Guest Log: [INFO] Condor JobID: 1415.80 in slot1
2017-11-13 06:10:32 (14908): Guest Log: [INFO] MCPlots JobID: 39453928 in slot1
2017-11-13 06:57:14 (14908): Preference change detected
2017-11-13 06:57:14 (14908): Setting CPU throttle for VM. (100%)
2017-11-13 06:57:48 (14908): Setting checkpoint interval to 600 seconds. (Higher value of (Preference: 60 seconds) or (Vbox_job.xml: 600 seconds))
2017-11-13 07:33:02 (14908): Preference change detected
2017-11-13 07:33:02 (14908): Setting CPU throttle for VM. (100%)
2017-11-13 07:33:34 (14908): Setting checkpoint interval to 600 seconds. (Higher value of (Preference: 60 seconds) or (Vbox_job.xml: 600 seconds))
2017-11-13 07:34:58 (14908): Preference change detected
2017-11-13 07:34:58 (14908): Setting CPU throttle for VM. (100%)
2017-11-13 07:37:48 (14908): Setting checkpoint interval to 600 seconds. (Higher value of (Preference: 60 seconds) or (Vbox_job.xml: 600 seconds))
2017-11-13 08:19:44 (14908): Status Report: Job Duration: '64800.000000'
2017-11-13 08:19:44 (14908): Status Report: Elapsed Time: '6013.543446'
2017-11-13 08:19:44 (14908): Status Report: CPU Time: '7182.894444'
2017-11-13 08:45:25 (14908): Guest Log: [INFO] Job finished in slot1 with 0.
2017-11-13 08:47:18 (14908): Guest Log: [INFO] New Job Starting in slot1
2017-11-13 08:47:18 (14908): Guest Log: [INFO] Condor JobID: 1440.79 in slot1
2017-11-13 08:47:24 (14908): Guest Log: [INFO] MCPlots JobID: 39457362 in slot1
2017-11-13 10:46:37 (14908): Status Report: Job Duration: '64800.000000'
2017-11-13 10:46:37 (14908): Status Report: Elapsed Time: '12013.869999'
2017-11-13 10:46:37 (14908): Status Report: CPU Time: '15124.296950'
2017-11-13 11:05:12 (14908): Guest Log: [INFO] Job finished in slot1 with 0.
2017-11-13 11:05:36 (14908): Guest Log: [INFO] New Job Starting in slot1
2017-11-13 11:05:36 (14908): Guest Log: [INFO] Condor JobID: 1453.150 in slot1
2017-11-13 11:05:36 (14908): Guest Log: [INFO] MCPlots JobID: 39459068 in slot1
2017-11-13 11:51:12 (14908): Guest Log: [INFO] Job finished in slot1 with 0.
2017-11-13 11:51:24 (14908): Guest Log: [INFO] New Job Starting in slot1
2017-11-13 11:51:24 (14908): Guest Log: [INFO] Condor JobID: 1470.85 in slot1
2017-11-13 11:51:29 (14908): Guest Log: [INFO] MCPlots JobID: 39461524 in slot1
2017-11-13 13:01:15 (14908): Status Report: Job Duration: '64800.000000'
2017-11-13 13:01:15 (14908): Status Report: Elapsed Time: '18013.902668'
2017-11-13 13:01:15 (14908): Status Report: CPU Time: '22535.639258'
2017-11-13 15:18:15 (14908): Status Report: Job Duration: '64800.000000'
2017-11-13 15:18:15 (14908): Status Report: Elapsed Time: '24014.540192'
2017-11-13 15:18:15 (14908): Status Report: CPU Time: '30256.066348'
2017-11-13 15:48:49 (14908): Guest Log: [INFO] Job finished in slot1 with 0.
2017-11-13 15:48:51 (14908): Guest Log: [INFO] New Job Starting in slot1
2017-11-13 15:48:51 (14908): Guest Log: [INFO] Condor JobID: 1503.1125 in slot1
2017-11-13 15:48:56 (14908): Guest Log: [INFO] MCPlots JobID: 39466625 in slot1
2017-11-13 18:03:54 (14908): Status Report: Job Duration: '64800.000000'
2017-11-13 18:03:54 (14908): Status Report: Elapsed Time: '30014.842810'
2017-11-13 18:03:54 (14908): Status Report: CPU Time: '38337.120949'
2017-11-13 18:14:54 (14908): Guest Log: [INFO] Job finished in slot1 with 0.
2017-11-13 18:25:23 (14908): Guest Log: [INFO] Condor exited with return value N/A.
2017-11-13 18:25:23 (14908): Guest Log: [INFO] Shutting Down.
2017-11-13 18:25:23 (14908): VM Completion File Detected.
2017-11-13 18:25:23 (14908): VM Completion Message: Condor exited with return value N/A. .
2017-11-13 18:25:23 (14908): Powering off VM.
2017-11-13 18:30:46 (14908): VM did not power off when requested.
2017-11-13 18:30:46 (14908): VM was successfully terminated.
2017-11-13 18:30:46 (14908): Deregistering VM. (boinc_bca0ac41590654b4, slot#24)
2017-11-13 18:30:46 (14908): Removing network bandwidth throttle group from VM.
2017-11-13 18:30:47 (14908): Removing storage controller(s) from VM.
2017-11-13 18:30:47 (14908): Removing VM from VirtualBox.
2017-11-13 18:30:47 (14908): Removing virtual disk drive from VirtualBox.
18:30:53 (14908): called boinc_finish(0)
</stderr_txt>
]]>
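When comparing several of these failed tasks, it can help to extract the timestamps from the stderr log programmatically. What follows is a minimal Python sketch. It assumes the log has one entry per line in the `YYYY-MM-DD HH:MM:SS (pid): message` form, as BOINC writes it, and it skips lines without a date, such as the final boinc_finish line. For this log, it would measure the gap between "Powering off VM." at 18:25:23 and "VM did not power off when requested." at 18:30:46: 323 seconds. Whether that delay is what triggers "finish file present too long" is a hypothesis to check, not something the log states. The function names are hypothetical.

```python
import re
from datetime import datetime

# Matches entries such as "2017-11-13 18:25:23 (14908): Powering off VM."
ENTRY_RE = re.compile(r"^(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}) \(\d+\): (.*)$")


def parse_entries(log_text):
    """Return (timestamp, message) pairs for every dated log line."""
    entries = []
    for line in log_text.splitlines():
        m = ENTRY_RE.match(line.strip())
        if m:
            stamp = datetime.strptime(m.group(1), "%Y-%m-%d %H:%M:%S")
            entries.append((stamp, m.group(2)))
    return entries


def seconds_between(entries, start_prefix, end_prefix):
    """Seconds from the first start_prefix message to the next end_prefix one.

    Raises StopIteration if either message is missing.
    """
    start = next(t for t, msg in entries if msg.startswith(start_prefix))
    end = next(t for t, msg in entries
               if t >= start and msg.startswith(end_prefix))
    return (end - start).total_seconds()


def finished_jobs(entries):
    """Count 'Job finished in slotN with 0.' messages reported by the guest."""
    return sum(1 for _, msg in entries
               if re.search(r"Job finished in slot\d+ with 0\.", msg))
```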

74)

Message boards :

ATLAS application :

Only getting 1 ATLAS WU. "No tasks are available for ATLAS Simulation"

(Message 33050)

Posted 12 Nov 2017 by marmot Post: I've tried that option, and it's not as flexible as a double install with separate WUprops tracking and separate cc_config.xml files. Each installation is on the drive with its data, can be moved to another computer, and is isolated from drive failure. A single install is less portable. You are saying a single install of BOINC can handle multiple queues and project lists? How, and what is the advantage when not using remote administration? Three BOINC icons on the taskbar take you straight to each of the three installs and open its management interface. There's no messing around with switches on the startup icon. I can get a triple BOINC install set up in the time it takes to copy the BOINC folder, and it is much easier than setting up a single new install and then messing around with configurations. Setup speed and ease of maintenance are crucial. Your solution is not portable. The BOINC app needs to be in the same folder as the data folder (D:\BOINC\APP and D:\BOINC\DATA), so that the entire install can be ported to another partition and each data folder can be moved with its app independently to a different computer. That would be D:\BOINC\APP and D:\BOINC\BUNKER\1. You don't need to reinstall BOINC. You just need to copy D:\BOINC to the new computer with its projects and app_config.xml intact, and allow a new crossID to be created once the new computer requests work.
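The layout described in this post uses one shared app folder and one data folder per client, for example D:\BOINC\APP and D:\BOINC\BUNKER\1. One binary can drive several such clients. The sketch below builds one client command line per data folder, using the BOINC client's `--allow_multiple_clients`, `--dir` and `--gui_rpc_port` options. The folder names come from the post. The port numbering (one above BOINC's default GUI RPC port, 31416, for each client) and the helper name are assumptions.

```python
from pathlib import PureWindowsPath

DEFAULT_GUI_RPC_PORT = 31416  # BOINC's default GUI RPC port


def client_commands(app_dir, bunker_dir, count, base_port=DEFAULT_GUI_RPC_PORT):
    """Build one boinc.exe command line per data folder bunker_dir/1..count.

    Each client gets its own data directory and its own GUI RPC port
    (base_port + n), so BOINC Manager can attach to each one separately.
    """
    exe = str(PureWindowsPath(app_dir) / "boinc.exe")
    commands = []
    for n in range(1, count + 1):
        data_dir = str(PureWindowsPath(bunker_dir) / str(n))
        commands.append([
            exe,
            "--allow_multiple_clients",
            "--dir", data_dir,
            "--gui_rpc_port", str(base_port + n),
        ])
    return commands
```

On the target machine, you could pass each list to `subprocess.Popen` to start that client, then point BOINC Manager at the matching port. Each data folder still moves together with the shared app folder, so the install stays portable in the sense the post describes.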

75)

Message boards :

Number crunching :

I am sent just ATLAS tasks

(Message 33049)

Posted 12 Nov 2017 by marmot Post: "Can you connect to vccondorce02.cern.ch on port 9618 from your Windows machine? If you open the following link in your browser you should get a connection reset error." In the router's QoS feature, I set TCP traffic from the VCCondor IP address (128.142.142.167:9618; maybe there is another IP, but it's not in my internet session list) to any of the BOINC machines' internal IPs to the lowest priority. That way, any other initial handshaking packets get priority over the 30-90 WUs currently communicating with 128.142.142.167:9618. BOINC and other traffic also get priority. That was 3 hours ago, and all the errors have stopped so far, but the real test will be one of my servers cold-starting 32 Theory WUs. That always ends in a communication disaster: the newest machine errored out 97 or more Theory WUs before it finally reached equilibrium. It would be nice if the retry count or polling period for the initial handshake with Condor were a bit higher/longer when cold-starting a server....

76)

Message boards :

ATLAS application :

Only getting 1 ATLAS WU. "No tasks are available for ATLAS Simulation"

(Message 33026)

Posted 8 Nov 2017 by marmot Post: "...Gave up on ATLAS for a while until I can find time to double install BOINC." I've tried that option, and it's not as flexible as a double install with separate WUprops tracking and separate cc_config.xml files. Each installation is on the drive with its data, can be moved to another computer, and is isolated from drive failure. A single install is less portable. I would run 32-core ATLAS tasks if that were an option. I only remember a few German words from my grandmother.

77)

Message boards :

Number crunching :

I am sent just ATLAS tasks

(Message 33025)

Posted 8 Nov 2017 by marmot Post: "I am running only Atlas tasks (not native) on my 2 Linux boxes, with SuSE Leap 42.2 and 42.3. I am running SETI@home, SETI Beta and Einstein@home, both CPU and GPU on my fastest machine, a Windows 10 PC with a GTX 1050 Ti graphic board." If there were an option to run a 32-core ATLAS, then my servers would run ATLAS only, but the maximum is 24 cores, and that was getting odd crashes on the test machine. There isn't enough RAM for 2x 16-core ATLAS (I'm not spending $100s more on RAM for just this project; other projects are fine), so the machines would have to run a mix of ATLAS and Theory, but the server won't distribute that mix properly. That brings us back to the initial solution I posted above if you want to run a mix of Theory, CMS, LHCb and ATLAS: multiple BOINC installs.

78)

Message boards :

Number crunching :

I am sent just ATLAS tasks

(Message 33023)

Posted 8 Nov 2017 by marmot Post: "Every Project is special." Here is some of the semantic confusion when discussing things on this particular forum. LHC@Home is a BOINC project. ATLAS is an LHC@Home work unit; it is NOT a BOINC project any longer. Once ATLAS was merged from its own BOINC server, it became a work unit within the LHC@Home project. The same goes for Theory and CMS: they are work units, not BOINC projects. Of course, they are projects in the sense of funding and work staff, but that sense confuses the discussion when trying to troubleshoot issues on the LHC@Home BOINC project's 'number crunching' forum.

79)

Message boards :

CMS Application :

CMS Tasks Failing

(Message 32963)

Posted 2 Nov 2017 by marmot Post: "Well, the glib answer is that there's a connectivity problem somewhere. :-/" My machines (in Missouri) were unable to process Theory for 3 hours, from 14:33 UTC to 17:26 UTC on Nov 1. Some of the WUs ran for over 2 hours before they failed. 60 WUs across 3 machines just stopped processing, and all but 10 ended with the same variety of ping errors Erich56 listed above. I was going to suggest that the DB lock issue from the other thread https://lhcathome.cern.ch/lhcathome/forum_thread.php?id=4496&postid=32952#32952 might be related. However, that would mean Erich56's WUs would have had to wait from 14:30 UTC until 20:00 UTC before failing (going by Erich56's posted log). Is that a possibility? @Erich56, did you notice whether the WUs that stayed in RAM were actually using CPU cycles?

80)

Message boards :

Number crunching :

I am sent just ATLAS tasks

(Message 32962)

Posted 2 Nov 2017 by marmot Post: "Why this way to use LHC, Marmot?" No other project among the 35 I've tried forces a user to limit their machine to a single type of the project's work units. All of them default to every work unit type except test units. Using only ATLAS WUs leaves the CPUs idle for too long during the startup phase, since RAM size limits the number of WUs. Idle time is lost work, so Theory needs to be running at the same time to assure me that there is NEVER a wasted CPU cycle. Also, not every computer will have the RAM to run more than 1 ATLAS task, so the other cores need to run Theory, SixTrack or some other BOINC project, or we just aren't getting the best use of our BOINC machines. If people want to run multiple types of work from LHC@Home at a time, why prevent or discourage it?


©2024 CERN