
Uploads of finished tasks not possible since last night


| Author | Message |

|---|---|

|

Joined: 5 Nov 15 Posts: 144 Credit: 6,301,268 RAC: 0 |

Aborting the upload and then clicking Update for the project would clear the upload slot and lead to a successful upload on other projects where a server glitch or maintenance cycle happened, but here the WU gets a failed upload error and is wasted. Can't this be prevented, given that the data set is complete and safely stored on the client drives? Why should a successfully completed data set (4 full CPU days per WU on my machines) be lost just because the upload slot was cleared and retried later? |

|

Joined: 18 Dec 15 Posts: 1934 Credit: 155,357,141 RAC: 124,112 |

David Cameron wrote this morning at 8:40 UTC: "The upload server got completely full so I'm cleaning it now - there is an automatic cleaning tool but it wasn't cleaning enough to handle the huge volumes of data we got this week." Did anyone manage to upload a finished ATLAS task at any point today? I sat at the PC for long stretches and pushed the "Retry now" button several hundred times, all day long, with no effect. A waste of time. Not a single one of my numerous finished tasks got uploaded. I suspect that it has all ended up in a mess, and that in the end we can delete all our finished tasks, as they will have reached their deadlines before being uploaded. |

|

Joined: 19 Feb 08 Posts: 708 Credit: 4,336,250 RAC: 0 |

I have one ATLAS task trying to upload on a Linux box and 2 on a Windows PC. The Linux task has a 20 December deadline, the Windows ones 22 December. Since ATLAS and SixTrack tasks are the only ones not failing on my PCs, I am not going to delete them. Tullio |

|

Joined: 12 Feb 14 Posts: 72 Credit: 4,639,155 RAC: 0 |

I have uploaded two of three results that were stuck, but one of them is still stuck with a "locked by file_upload_handler PID=-1" error:

12/16/2017 1:43:11 PM | LHC@home | Started upload of tKSLDmWh3irnDDn7oo6G73TpABFKDmABFKDm9BLKDmABFKDmF5OqMn_1_r815870200_ATLAS_result
12/16/2017 1:43:16 PM | LHC@home | [error] Error reported by file upload server: [tKSLDmWh3irnDDn7oo6G73TpABFKDmABFKDm9BLKDmABFKDmF5OqMn_1_r815870200_ATLAS_result] locked by file_upload_handler PID=-1
12/16/2017 1:43:16 PM | LHC@home | Temporarily failed upload of tKSLDmWh3irnDDn7oo6G73TpABFKDmABFKDm9BLKDmABFKDmF5OqMn_1_r815870200_ATLAS_result: transient upload error
12/16/2017 1:43:16 PM | LHC@home | Backing off 03:39:17 on upload of tKSLDmWh3irnDDn7oo6G73TpABFKDmABFKDm9BLKDmABFKDmF5OqMn_1_r815870200_ATLAS_result |
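For anyone with several tasks in this state, the relevant messages can be picked out of the BOINC event log mechanically. The Python sketch below is only an illustrative helper: the function name `summarize` and both regular expressions are made up for this example and match just the message formats quoted in this thread, not every message the BOINC client can print. It reports which files the upload server called locked (with the PID it gave) and the backoff, in seconds, before each file's next retry.

```python
import re

# Matched against the message part of each line only, so the locale-specific
# timestamp prefix does not matter. These patterns cover just the message
# formats quoted in this thread.
LOCKED_RE = re.compile(
    r"\[error\] Error reported by file upload server: "
    r"\[(?P<file>[^\]]+)\] locked by file_upload_handler PID=(?P<pid>-?\d+)"
)
BACKOFF_RE = re.compile(
    r"Backing off (?P<h>\d+):(?P<m>\d{2}):(?P<s>\d{2}) on upload of (?P<file>\S+)"
)


def summarize(log_lines):
    """Return ({file: reported PID} for locked files, {file: backoff in seconds})."""
    locked = {}
    backoff = {}
    for line in log_lines:
        # Event-log lines look like "<timestamp> | <project> | <message>".
        message = line.split(" | ", 2)[-1]
        m = LOCKED_RE.search(message)
        if m:
            locked[m.group("file")] = int(m.group("pid"))
            continue
        m = BACKOFF_RE.search(message)
        if m:
            h, mins, s = (int(m.group(k)) for k in ("h", "m", "s"))
            # A later backoff line for the same file replaces an earlier one.
            backoff[m.group("file")] = h * 3600 + mins * 60 + s
    return locked, backoff
```

Fed the four log lines above, it would report the one file as locked with PID -1 and a backoff of 13,157 seconds (03:39:17).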

|

Joined: 10 Nov 17 Posts: 6 Credit: 213,871 RAC: 0 |

Since last night, uploads of finished tasks get stuck in "backoff" status. I have had the same problem for a few days. The upload speed and the progress bar stay at 0.000% (0 KB); only the upload time keeps running, but it stops after a few minutes and a message appears: 'restarting in ....' (mostly over 4 h!). One simulation has been doing this for 2 days, and the 'Active tasks' window shows 'upload in progress' even though there is no progress at all! |

|

Joined: 10 Nov 17 Posts: 6 Credit: 213,871 RAC: 0 |

Finished tasks, yes, but the upload always fails. |

|

Joined: 18 Dec 15 Posts: 1934 Credit: 155,357,141 RAC: 124,112 |

A short time ago, several of my finished tasks were finally uploaded, which I am very pleased about, of course! However, 2 "old" ones (i.e. ones that were started before the server crashed) did NOT upload. Their deadlines are tomorrow and the day after tomorrow. Any chance that they will be uploaded in time? |

|

Joined: 13 May 14 Posts: 387 Credit: 15,314,184 RAC: 0 |

Here is my interpretation of what is happening:
- the upload server is overloaded, so many uploads fail, leaving a half-complete file
- the retries fail because the half-complete file is still there (the "locked by file_upload_handler PID=-1" error)
- our cleaning of incomplete files runs only once per day, so there is no possibility of retries succeeding until one day has passed
- the server getting full last night was yet another problem, but this is now fixed

I have changed the cleaning to run once every 6 hours and delete files older than 6 hours to make it more aggressive. But if you have a failed upload you'll still have to wait some time before it will work, so clicking retry every few minutes won't help.

In addition, I've limited submission to keep only 10,000 WU in the server, so there won't be any new WU until the upload backlog clears and the number of running WU goes under 10,000. If it looks like many WU will go over the deadline, we can look at how to extend the deadline, although I don't know an easy way to do that right now. |
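The more aggressive cleaning described in that post (run every 6 hours, delete incomplete files older than 6 hours) could look roughly like the Python sketch below. It is not the project's actual cleaning tool, whose code is not shown in this thread; the function name `clean_stale_uploads` and the use of file modification time as the file's age are assumptions. It also deletes by age alone, which is a simplification: in a standard BOINC setup, completed output files also sit in upload/ until the file deleter removes them, so a real cleaner would need some way to tell incomplete uploads apart from finished ones.

```python
import os
import tempfile
import time
from pathlib import Path

MAX_AGE_SECONDS = 6 * 3600  # "delete files older than 6 hours"


def clean_stale_uploads(upload_dir, max_age=MAX_AGE_SECONDS, now=None):
    """Delete regular files under upload_dir last modified more than max_age ago.

    Hypothetical helper: age is taken from the file's mtime, and no check is
    made that a file is actually incomplete. Returns the deleted paths.
    """
    now = time.time() if now is None else now
    deleted = []
    # Materialize the listing first so files are not removed mid-traversal.
    for path in list(Path(upload_dir).rglob("*")):
        if path.is_file() and now - path.stat().st_mtime > max_age:
            path.unlink()
            deleted.append(path)
    return deleted


def demo():
    """Clean a temp dir holding one 7-hour-old and one fresh file."""
    with tempfile.TemporaryDirectory() as d:
        old = Path(d) / "old_result"
        new = Path(d) / "new_result"
        old.write_bytes(b"partial")
        new.write_bytes(b"partial")
        now = time.time()
        os.utime(old, (now - 7 * 3600, now - 7 * 3600))  # backdate by 7 h
        deleted = clean_stale_uploads(d, now=now)
        remaining = sorted(p.name for p in Path(d).iterdir())
        return [p.name for p in deleted], remaining
```

Running it every 6 hours would be left to an external scheduler such as cron.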

|

Joined: 18 Dec 15 Posts: 1934 Credit: 155,357,141 RAC: 124,112 |

Thanks, David, for the thorough explanations, and also for your efforts to straighten things out. So we'll see what happens during the next few days :-) |

|

Joined: 12 Feb 14 Posts: 72 Credit: 4,639,155 RAC: 0 |

I think I have seen other BOINC projects with upload handlers that either automatically start over on failed file uploads or direct the BOINC client to resume the upload at the point where the interruption occurred. Could this project be programmed to do either of these? |
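The second option, resuming from the point of interruption, can be modelled in a few lines. As far as I know, BOINC's upload protocol already lets the client ask the server how much of a file it holds before continuing; the Python sketch below only models that idea in memory and does not reproduce BOINC's actual request format. `PartialUploadServer`, `stored_size` and `upload` are hypothetical names, and the chunk size and the simulated dropped connection are arbitrary.

```python
class PartialUploadServer:
    """In-memory stand-in for an upload server that keeps partial files."""

    def __init__(self):
        self.files = {}  # file name -> bytes received so far

    def stored_size(self, name):
        """Number of bytes of `name` already held (0 if none)."""
        return len(self.files.get(name, b""))

    def append(self, name, offset, chunk):
        stored = self.files.get(name, b"")
        # Only accept data that continues exactly where the stored part ends.
        if offset != len(stored):
            raise ValueError("offset does not match the stored size")
        self.files[name] = stored + chunk


def upload(server, name, data, chunk_size=4, fail_after=None):
    """Upload `data`, continuing from whatever the server already holds.

    `fail_after` simulates a dropped connection after that many chunks.
    Returns True once the whole file is on the server.
    """
    offset = server.stored_size(name)  # resume point instead of byte 0
    sent = 0
    while offset < len(data):
        if fail_after is not None and sent == fail_after:
            return False  # connection lost; the partial file stays on the server
        chunk = data[offset:offset + chunk_size]
        server.append(name, offset, chunk)
        offset += len(chunk)
        sent += 1
    return True
```

For example, with a 16-byte file and `fail_after=2`, the first call stores 8 bytes and returns False, and a second call sends only the remaining 8 bytes.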

|

Joined: 6 Jul 17 Posts: 22 Credit: 29,430,354 RAC: 0 |

Thanks, David, for your work at the weekend. Some uploads finished during the night; some are still stuck at 100% in upload but not finished. In my opinion the bottleneck is the part between the upload reaching 100% and the 'upload OK and task closed' message being sent to the BOINC client. I don't know how server-side BOINC works (perhaps it copies the temporary upload file), but it looks like the BOINC client runs into a timeout and marks the upload as faulty, which results in a completely new upload. If this happens on many clients, you'll get a huge upload load. So if there is a timeout value in the BOINC client, doubling it would help projects with large upload files. |
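The concern about many clients retrying at once is usually handled with randomized exponential backoff, and the BOINC client already backs off between upload attempts (see the "Backing off" lines in the logs in this thread). The exact formula the client uses is not shown here; the Python sketch below is a generic capped exponential backoff with "full jitter", and the function name, the 60-second base and the 4-hour cap are assumptions chosen for illustration.

```python
import random


def backoff_delay(attempt, base=60.0, cap=4 * 3600.0, rng=random):
    """Seconds to wait before retry number `attempt` (0-based).

    The delay is drawn uniformly from [0, min(cap, base * 2**attempt)].
    """
    exponent = min(attempt, 32)  # keep the number small; the cap applies long before
    ceiling = min(cap, base * 2 ** exponent)
    return rng.uniform(0.0, ceiling)


if __name__ == "__main__":
    rng = random.Random(1)  # seeded only to make the demo repeatable
    for attempt in range(6):
        print(attempt, round(backoff_delay(attempt, rng=rng)))
```

Because each delay is drawn at random below a growing ceiling, clients that failed at the same moment spread their retries out instead of hitting the server together.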

|

Joined: 12 Feb 14 Posts: 72 Credit: 4,639,155 RAC: 0 |

I have finally been able to upload and report my ATLAS@home task. |

|

Joined: 16 Sep 17 Posts: 100 Credit: 1,618,469 RAC: 0 |

I could be mistaken, but I think some WUs simply jumped to 100% when the upload failed at some x%. For example, the last percentage I saw was 63%, then it suddenly showed 100%, and then it failed and went into retry. The upload could not have completed at the current speed (50 kbps) in that short a time. What I am trying to say is that the numbers you see could mislead you into thinking the database is the issue even though it is not. |

|

Joined: 24 Jul 16 Posts: 88 Credit: 239,917 RAC: 0 |

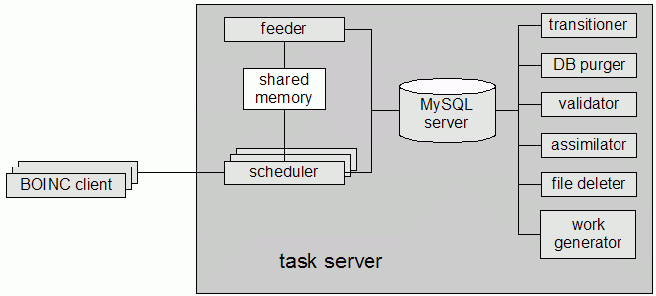

@csbyseti: "Don't know how server side Boinc works"

It's true that this part of the BOINC project is not clearly described (at least general guidelines should appear somewhere to explain to volunteers what the terms on the project's server status page mean), so I found a short summary on the net:

Task server (or scheduling server) in detail:
- The scheduler handles requests from BOINC clients.
- The feeder caches jobs which are not yet transmitted.
- The transitioner examines jobs for which a state change has occurred and handles this change.
- The database purger removes job and instance database entries that are no longer needed.
- The validator compares the instances of a work unit.
- The assimilator handles tasks which are done.
- The file deleter deletes input and output files that are no longer needed.
- The work generator creates new jobs and their input files.

Unfortunately, the only component missing from this picture is the file upload handler, which is not linked to database storage, but I found a picture on the net (slide 5) where its functioning is explained (slides 22-24).

Server directory structure

The directory structure for a typical BOINC project looks like this:

PROJECT/
- bin/: server daemons and programs
- cgi-bin/: CGI programs
- log_HOSTNAME/: log output
- pid_HOSTNAME/: lock files, pid files
- download/: storage for data server downloads
- html/: PHP files for public and private web interfaces (subdirectories inc/, ops/, project/, stats/, user/, user_profile/)
- keys/: encryption keys
- upload/: storage for data server uploads

|
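To show where the file upload handler sits relative to the daemons listed in that post, here is a Python sketch of one job's path through a project. It is pieced together from the component descriptions above, with the order simplified into a single line (the real daemons run concurrently and the transitioner acts at several points); the `Stage` type, the `uses_database` flags and `off_database_steps` are illustrative assumptions, not BOINC code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    component: str
    does: str
    uses_database: bool


# Simplified path of one job; real daemons run concurrently, not in sequence.
PIPELINE = (
    Stage("work generator", "creates the job and its input files", True),
    Stage("feeder", "caches jobs that are not yet sent", True),
    Stage("scheduler", "hands the job to a client", True),
    Stage("BOINC client", "computes the result", False),
    Stage("file upload handler", "stores the output file in upload/", False),
    Stage("scheduler", "receives the client's report", True),
    Stage("transitioner", "reacts to the state change", True),
    Stage("validator", "compares the instances of the work unit", True),
    Stage("assimilator", "handles the validated result", True),
    Stage("file deleter", "removes input and output files", True),
    Stage("database purger", "removes the database entries", True),
)


def off_database_steps(pipeline=PIPELINE):
    """Components in the path that move data without the project database."""
    return [s.component for s in pipeline if not s.uses_database]
```

In this model the upload handler is the only server-side step that writes results without touching the database, which fits the observation that an upload can stall even while the rest of the server looks healthy.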

|

Joined: 15 Nov 14 Posts: 602 Credit: 24,371,321 RAC: 0 |

Good news: my first three native ATLAS tasks on my two Ubuntu machines ran properly and completed without error. All three have now uploaded automatically in the last day. But I hope someone will give us the "all clear" when things are back to normal. I don't want to get any more until then, though that may not be until January. |

|

Joined: 2 May 07 Posts: 2278 Credit: 178,775,457 RAC: 11 |

ATLAS doesn't upload finished tasks. There is a problem with the upload server at the moment; see the SixTrack threads. |

|

Joined: 28 Sep 04 Posts: 790 Credit: 63,272,445 RAC: 25,754 |

Quote: "Atlas doesn't upload finished tasks." So what is the 110 MB file ATLAS is trying to upload? The one I have has been stuck for 16 hours.

|

|

Joined: 2 May 07 Posts: 2278 Credit: 178,775,457 RAC: 11 |

One of my 5 ATLAS tasks was uploaded a few moments ago. We have to wait... and we are hopeful. |

|

Joined: 28 Sep 04 Posts: 790 Credit: 63,272,445 RAC: 25,754 |

The one that is stuck has now retried 10 times. The last retry went to 100% but still failed with a transient HTTP error. Today I have successfully uploaded 3 tasks on two different hosts.

|

|

Joined: 16 May 08 Posts: 4 Credit: 1,320,525 RAC: 0 |

I now also have 2 WUs that are not uploading:

Sat 23 Dec 2017 15:07:34 CET | LHC@home | [error] Error reported by file upload server: [wpaMDmkmnjrnSu7Ccp2YYBZmABFKDmABFKDmO0IKDmABFKDmioHCBn_1_r210029558_ATLAS_result] locked by file_upload_handler PID=-1
Sat 23 Dec 2017 15:07:34 CET | LHC@home | Temporarily failed upload of wpaMDmkmnjrnSu7Ccp2YYBZmABFKDmABFKDmO0IKDmABFKDmioHCBn_1_r210029558_ATLAS_result: transient upload error
Sat 23 Dec 2017 15:07:34 CET | LHC@home | Backing off 01:19:18 on upload of wpaMDmkmnjrnSu7Ccp2YYBZmABFKDmABFKDmO0IKDmABFKDmioHCBn_1_r210029558_ATLAS_result |

©2026 CERN