Information on ATLAS tasks

Joined: 13 May 14 Posts: 387 Credit: 15,314,184 RAC: 0

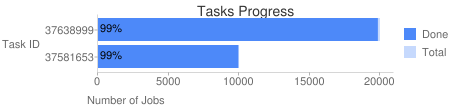

This thread provides up-to-date information on the tasks ATLAS is running. It is designed as a kind of news feed rather than a discussion thread, so please do not post comments here. As a reminder, if you have problems running ATLAS tasks, please go through Yeti's checklist.

ATLAS tasks run simulations of protons colliding inside the ATLAS detector at the LHC. The input file, whose name starts with EVNT, is a collection of "events". Each event represents a simulated proton collision inside the ATLAS detector, and the file contains descriptions of the particles produced by these collisions. Each kind of particle (e.g. a Higgs boson) is produced in a collision with a certain probability, so these events are randomly generated according to those probabilities.

What the ATLAS WUs (work units) do is simulate how the particles in each event interact with the detector, which consists of many extremely complex components. The description of the detector lives partly in the ATLAS simulation software and partly in remote database services, from which data is read over the network at the start of each WU.

The output of the simulation is the HITS file, which describes where each particle "hits" (i.e. interacts with) the detector. A truly successful WU must therefore produce a valid HITS file. However, you can still get credit even if no HITS file is present, because we don't want people to suffer from problems in the ATLAS software or infrastructure.

At the moment each task simulates 200 events, but because different tasks simulate different kinds of physics processes, the length of each task can vary. As described in this thread, you can monitor the progress of a task through the VirtualBox consoles. When we have new types of tasks or different numbers of events, we will post updates in this thread.
The progress of current batches of tasks is shown here (the image is refreshed every 15 minutes):

Each task can use from 1 to 8 cores, according to the "Max # CPUs" setting in the LHC@Home project preferences. The default is 1, so if you want to run multicore tasks you should change this setting. You can also change the number of cores and other settings with an app_config.xml file (recommended for experienced volunteers only). The events to process are split among the available cores, so normally each core processes (number of events in the task) / (number of cores).

The processes share memory, which means an N-core task uses less total memory than N single-core tasks. The memory allocated to the virtual machine is calculated from the number of cores using the formula 3 GB + 0.9 GB × ncores. It is recommended to have more than 4 GB of memory available to run ATLAS tasks; even single-core tasks are not practical to run with 4 GB or less.

Console 3 shows the processes currently running. A healthy WU (after the initialisation phase) should have N athena.py processes using close to 100% CPU, where N is the number of cores. You will sometimes see an extra athena.py process; this is the "master" process, which controls the "child" processes doing the actual simulation.
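
The core and memory arithmetic described in this post, plus the console 3 health check, can be sketched in a few lines of Python. This is only an illustration, not part of the ATLAS or BOINC software: the function names, the (name, CPU %) input format and the 90% threshold standing in for "close to 100% CPU" are assumptions. Only the 1 to 8 core range, the even split of events across cores and the 3 GB + 0.9 GB × ncores formula come from the post itself.

```python
# A minimal sketch of the resource arithmetic described in the post.
# Function names and the 90% "busy" threshold are illustrative assumptions.

MIN_CORES, MAX_CORES = 1, 8  # range allowed by "Max # CPUs"


def _check_cores(n_cores: int) -> None:
    """Reject core counts outside the 1-8 range an ATLAS task can use."""
    if not MIN_CORES <= n_cores <= MAX_CORES:
        raise ValueError(f"n_cores must be between {MIN_CORES} and {MAX_CORES}")


def events_per_core(n_events: int, n_cores: int) -> float:
    """Events each core processes when a task's events are split evenly."""
    _check_cores(n_cores)
    return n_events / n_cores


def vm_memory_gb(n_cores: int) -> float:
    """VM memory from the post's formula: 3 GB + 0.9 GB * ncores."""
    _check_cores(n_cores)
    return 3.0 + 0.9 * n_cores


def looks_healthy(processes: list[tuple[str, float]], n_cores: int,
                  busy_threshold: float = 90.0) -> bool:
    """Rough console-3 check after the initialisation phase.

    `processes` is a hypothetical list of (process name, CPU %) pairs.
    Expect exactly n_cores busy athena.py workers, plus at most one extra
    athena.py (the master), which is assumed here to be mostly idle.
    """
    _check_cores(n_cores)
    athena = [cpu for name, cpu in processes if name == "athena.py"]
    busy = [cpu for cpu in athena if cpu >= busy_threshold]
    return len(busy) == n_cores and len(athena) <= n_cores + 1


if __name__ == "__main__":
    cores = 4
    print(f"events per core: {events_per_core(200, cores):.0f}")
    print(f"one {cores}-core VM: {vm_memory_gb(cores):.1f} GB")
    print(f"{cores} single-core VMs: {cores * vm_memory_gb(1):.1f} GB")
```

For a 200-event task on 4 cores this gives 50 events per core and a 6.6 GB VM, compared with 4 × 3.9 = 15.6 GB for four single-core tasks, which is why multicore tasks use less total memory.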

©2026 CERN