Message boards : Number crunching : News, Status and Plans, 19th November, 2013


| Author | Message |

|---|---|

|

Joined: 12 Jul 11 Posts: 857 Credit: 1,619,050 RAC: 0 |

After seven and a half years of "retirement" my status as an Honorary Group member of Accelerator Beam Physics in the BE Department has been renewed for a further three years. I have also received about four pages of conditions referring to the Staff Rules and Regulations which I have not yet studied in detail. I must therefore begin by saying that the following expresses my own views and opinions and is not necessarily endorsed by CERN. First, a hot topic from the Message Boards, more work for LHC@home. I quote: "October has been quite busy with several workshops and preparatory activities. Currently we are in a LHC upgrade workshop to discuss the work done and agree the future steps with our collaborators. In the time scale of weeks, we should have other studies coming up for upgrade and simulations of LHC beam dynamics experiments. By the end of November at the latest we should be back in business." I think that is perhaps slightly optimistic as you might gather from my following reports. I would just add that as surmised on the MBs we will NOT submit work just to keep the system busy. I think the MBs also cover pretty well how to work with other projects while waiting for LHC@home. I have submitted a few thousands of jobs to check (again) floating-point reproducibility (see later). Let me just repeat your contributions are greatly appreciated. LHC@home provides us with the equivalent of 25,000 cores, as a very conservative estimate, and hopefully more when we really need the capacity. (For comparison Tier 0 of the GRID at CERN (and Hungary) provides some 120,000 cores but at a cost of tens of millions of dollars and hundreds of man years.) Now, it should go without saying, our primary goal is to evaluate various upgrade scenarios for the LHC. The good news is that a comparison of the actual LHC Dynamic Aperture (DA) with the SixTrack estimates shows an excellent agreement. When I get the paper I shall provide a pointer. 
This is very encouraging and many of the studies performed used LHC@home for which our thanks. Service status: The service, and how to use it, is now documented. It is still a bit rough around the edges and we have suffered three nasty incidents this year (all our own fault). I produced a dud executable :-(, we had a database hiccup, and we lost the permission for Apache to upload results. This was quickly pointed out by you on the MBs and was finally resolved without any loss of results. We still suffer from the "tail" of incomplete cases meaning it takes a bit long to complete a study in spite of both BOINC and SixDesk options to handle it. It would be good to have an easy way for the physicists and for us to see WU status, WUs queued, position in queue, sorted by User. Overall the reliability is rather good, but we still suffer from perennial disk space problems and the CERN Andrew File System (AFS) which need to be solved rather urgently (see later news on SixTrack). We still get a very few validated empty results where I get "no_output" from at least two sources (of course both validated because both are null and null is equal to null!). In general, our operations require too much human checking and intervention and are therefore error prone. We need to automate and send an e-mail when say disk space is getting full, or daemons exit etc. I also realise that many would like some graphics on your side and I am thinking in terms of a very simple display of the number of turns completed so far, a running counter. Previous graphics, while appreciated by some, were not realistic and consumed too many resources, as well as making it almost impossible to build across the three (soon to be four?) operating systems concerned. Still we must try and keep it simple as the system is already complicated. 
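The "null is equal to null" validation problem described above can be illustrated with a small sketch. This is not the project's BOINC validator: the function name `results_agree` and the representation of a result as a byte string are assumptions made purely for illustration. The point is only that two results should count as agreeing when both are non-empty and byte-identical.

```python
def results_agree(result_a: bytes, result_b: bytes) -> bool:
    """Return True only if both results are non-empty and byte-identical.

    Hypothetical helper: a naive `result_a == result_b` check would accept
    two empty outputs, because b"" == b"" is True.
    """
    if not result_a or not result_b:
        # An empty ("no_output") result carries no physics, so never validate it.
        return False
    return result_a == result_b


if __name__ == "__main__":
    print(results_agree(b"", b""))              # two empty results are rejected
    print(results_agree(b"turn 1", b"turn 1"))  # identical non-empty results agree
```

A check of this kind would turn the rare empty pair into an ordinary validation failure, so the case would presumably be re-issued rather than silently accepted.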
SixTrack: A typical study will consist of thousands of WUs, each making a SixTrack run with different initial conditions, round a particular LHC configuration, or lattice, for typically 100,000 or 1,000,000 turns for studying beam-beam effects. Each turn involves about 10,000 steps, each step being one of about 50 different elements such as drift, dipole, quadrupole, multipole magnets, cavity, beam-beam etc. The last month has been a bit hectic testing and debugging two new types of element, and there should be a new SixTrack very shortly incorporating them. At the same time I am implementing the return of all the SixTrack result files, including the C/R files, for full functionality, but at the expense of an increase in your Upload time. I am also adding two new parameters NUMLMAX and NUMLCP. NUMLCP will simply specify the number of turns between checkpoints. NUMLMAX will specify the maximum turns per WU. This should allow us to split a million turn WU into say, ten (sequential and dependent) WUs, at the expense of more Upload/Download. In particular this capability would allow us to make studies of up to ten million turns (never been done before) and to study the growth of the floating-point error which may invalidate the results (see later). The results file Sixout.zip is about 2MB to begin but will increase each time to up to 20MB (estimate to be verified in practice). In the very worst case of ten million turns it might be as large as 200MB; we shall see. The Sixout.zip becomes the Sixin.zip for the next run and so the download of the next WU will also take longer. While this is all rather complicated the rewards are great and I shall give it a try on the Sixtest platform at first. On the medium/longer term, apart from new elements, my colleagues are planning a significant re-structure, and I would get rid of obsolete pre-Fortran 77 features. Remember SixTrack is some 20 years old! 
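To make the NUMLMAX/NUMLCP idea concrete, here is a minimal sketch of how a long study might be cut into sequential sub-WUs. It is not SixTrack or SixDesk code: the function `plan_subcases`, its argument names, and the choice to place checkpoints at multiples of NUMLCP counted from the first turn of the whole study are all assumptions made for illustration.

```python
def plan_subcases(total_turns: int, numlmax: int, numlcp: int):
    """Split `total_turns` into sequential sub-WUs of at most `numlmax` turns.

    Returns a list of (first_turn, last_turn, checkpoint_turns) tuples.
    Hypothetical helper; checkpoint turns are global multiples of `numlcp`.
    """
    if total_turns <= 0 or numlmax <= 0 or numlcp <= 0:
        raise ValueError("all arguments must be positive")
    plan = []
    first = 1
    while first <= total_turns:
        last = min(first + numlmax - 1, total_turns)
        # First multiple of numlcp that is >= first.
        start = ((first + numlcp - 1) // numlcp) * numlcp
        checkpoints = list(range(start, last + 1, numlcp))
        plan.append((first, last, checkpoints))
        first = last + 1  # the next sub-WU resumes where this one stopped
    return plan


if __name__ == "__main__":
    # Each sub-WU depends on the output of the one before it.
    for index, (first, last, cps) in enumerate(plan_subcases(250, 100, 40), start=1):
        print(f"subcase _{index}: turns {first}-{last}, checkpoints at {cps}")
```

With a million turns and a NUMLMAX of 100,000 this gives the ten dependent WUs mentioned above; since each one needs the previous result, they cannot run in parallel.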
I hope the re-structure would include writing the important binary data to one file instead of one file per particle pair. (For GPUs I would like to track at least 512 particles in one WU but don't really want to have 256 binary files.) I recently implemented my "own" formatted input conversion, tedious, but necessary, while awaiting Fortran 2003 ROUND options on formatted I/O on which more later. It is shameful that we do not have a one-click installation for the BOINC client for Linux at CERN (Scientific Linux CERN 6, SLC6). The SixTrack documentation needs to be brought up to date. Compilers and Build: After several initial years running happily with Lahey-Fujitsu lf95, which while not guaranteed, provided Linux/Windows compatibility, support for 32-bit systems was reduced and there was no budget (or other interest) to buy the latest release. Intel is a member of the CERN OpenLab and ifort was available, initially free. It was a major effort over months to figure out the options to ensure floating-point consistency and Linux/Windows compatibility. At a recent Webinar on this topic the Intel speaker dismissed the notion of Linux/Windows floating-point compatibility in his opening paragraph! I finally managed to build a BOINC executable on CYGWIN on Windows XP without creating a MS Studio project. Now we are being forced to Windows 7 at CERN. However, we do have a few ifort licences and have built for Macs as well. (I bought a MAC at my own expense for testing, but I refuse to buy a licence and install ifort on it.) For several months now we have been trying to create a build platform, a virtual machine on which we can build BOINC executables for Linux, Windows, and MAC. We require the BOINC server-stable libraries and the "new" zip functionality from the trunk if I understand correctly. For two years now I have been unable to use more recent ifort Releases as they lose floating-point reproducibility at levels of optimisation greater than zero. 
Current testing indicates that this is fixed in the latest Linux version available at CERN. Windows/Linux/MAC compatibility was achieved by using the freely available David M. Gay routines dtoa and strtod for essential formatted I/O, a time consuming and error prone process. While ifort accepts the syntax for Fortran 2003 ROUND= on formatted I/O, testing shows that it is probably not yet implemented correctly. Note that all seven of our executables are 32-bit which run just fine on 64-bit systems. Why double the number of executables and the testing for no obvious gain? See also floating-point reproducibility. All executables are STATIC linked to avoid the use of different libraries (except for MACs???). This option seems to somehow be becoming deprecated but is essential for compatibility. The SixTrack source is maintained in SVN and I have a script make_six which eases the task of building with different physics options, different compilers and compiler options etc etc. On Linux only, I also use other compilers. The NAG nagfor compiler is used for checking code and for standard compliance. Sadly, I cannot complete the checking of use of undefined variables, due to some complicated code in the Differential Algebra routines of SixTrack but I hope to solve that eventually. gfortran now compiles and executes SixTrack correctly. Lahey-Fujitsu lf95 is fine at O0. We recently purchased the PGI compiler suite in order to have support for GPUs and pgf90 works correctly too (but not yet tried any GPU code). Apart from the Linux-only availability, there remains the question of code generation options where, for example, pgf90 offers around 40 target processors! I need to look at gfortran options (gcc?) and I am not sure about nagfor. (I might try g95.) On the medium term I think it would be good to use gfortran on all OS; after all we are a volunteer project and gfortran is free. 
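The reason formatted I/O matters for compatibility is that a number written as decimal text must come back as exactly the same binary double on every platform. The sketch below shows that property in Python; it does not call the dtoa or strtod routines themselves, and the helper name `round_trips` is an assumption made for illustration. It compares the exact hexadecimal form of a double before and after a trip through decimal text.

```python
def round_trips(x: float, fmt: str) -> bool:
    """Check whether writing x with format `fmt` and reading it back keeps every bit.

    Hypothetical helper: float.hex() gives an exact representation of a double,
    so equal hex strings mean bit-identical values (NaN is not handled here).
    """
    text = format(x, fmt)
    return float(text).hex() == x.hex()


if __name__ == "__main__":
    x = 0.1 + 0.2
    print(format(x, ".15g"), round_trips(x, ".15g"))  # 15 digits lose the last bit
    print(format(x, ".17g"), round_trips(x, ".17g"))  # 17 digits always suffice
```

Seventeen significant digits are enough to round-trip any IEEE 754 double, but only when both the writer and the reader round correctly, which is the part that differs between run-time libraries.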
Moving to gfortran would also require installing our own versions of gfortran, gcc and the GNU libraries as updates and changes to them are outwith our control. It might also be worth trying to establish a collaboration with NAG, for whom I have great respect, at least in terms of testing some Fortran 2003 features and on floating-point reproducibility in general. The top priority is to get a reliable build platform for executables for Windows, Linux, and MACs where we have/need 3 versions, generic, sse2, sse3 (but only sse2 for Macs). SixDesk: This is the name given to the set of scripts which allow a physicist to prepare a study, submit WUs, retrieve results and post-process them. As part of the implementation of NUMLMAX, multiple WUs per case, I need to make significant modifications to handle the now complete results, and to manage the multiple subcases. They will all have the same name but with _1, _2, etc appended. This is complicated by AFS restrictions on the results file hierarchy, lack of disk space, and long names. Still I think I can have a prototype rather quickly as there is significant interest in making extended runs of more than one million turns. Floating-Point Reproducibility and Error Analysis: We have basically solved this problem, and I believe we are the only project with significant floating-point arithmetic to have done so. I repeat: we get bit-for-bit identical results with 5 different Fortran compilers at "any" level of optimisation. My tests this week have failed to reproduce any result differences, yet the cases under test produced different but validated results :-(. Only 5 out of 50,000, good enough for physics but not satisfactory. I can never prove my methodology is complete, and I now have to become a MySQL expert and look at rejected non-valid results as well and the systems which produced them. In the past these were traced to failing machines, usually over-clocked hardware. I need to complete testing and publish. Unlikely this year now. 
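Why bit-for-bit agreement across compilers and optimisation levels is hard can be seen in a few lines. Floating-point addition is not associative, so a compiler that reorders a sum can change the last bits of the result. The sketch below is in Python rather than Fortran, and the helpers `bit_pattern` and `identical` are assumptions made for illustration, not part of SixTrack or of the BOINC validator.

```python
import struct


def bit_pattern(x: float) -> str:
    """Return the IEEE 754 double-precision bits of x as 16 hex digits."""
    return struct.pack(">d", x).hex()


def identical(results_a, results_b) -> bool:
    """Bit-for-bit comparison of two sequences of doubles."""
    return [bit_pattern(v) for v in results_a] == [bit_pattern(v) for v in results_b]


# The same three numbers summed in two different orders.
left_to_right = (0.1 + 0.2) + 0.3
right_to_left = 0.1 + (0.2 + 0.3)

if __name__ == "__main__":
    print(bit_pattern(left_to_right), bit_pattern(right_to_left))
    print(identical([left_to_right], [right_to_left]))  # False: the sums differ
```

Over a million turns of about 10,000 steps each, a difference of this size has plenty of opportunity to grow, which is why results are compared on their exact bits rather than within a tolerance.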
As of today, in addition to Linux/Windows/MAC compatibility I also get identical results on Linux with gfortran O4, pgf90 fast O, nagfor O, and lf95 O0. I need to get the latest crlibm from Lyon and also install the 64-bit version as well as the Round Up (_ru), and Round Down (_rd) in addition to the current Round Nearest (_rn). I can then carry out a Computing Experiment with your help, where we will run a study with the different versions and with the FPU set to do the same rounding. It is hoped that this will give us some idea of the growth and magnitude of floating-point error (as suggested by "reference to be supplied"). I need a special test to check Fortran 2003 implementations of ROUND= which will be an enormous simplification and a big step forward. No more on this for now but it is clearly a topic close to my heart on which I have been working for ten years. In addition to the compiler switches I explicitly disable Extended Precision in the Floating-Point registers, as does lf95 by default, and make sure I do not use FMA or other not always available hardware features. I should also check and report, in the SixTrack output which will now be returned, on both the FPU and the SSE register status. We can use sse because we do not require precise floating-point exceptions and I have verified that results do not depend on flush-to-zero or not. There is a lot of interesting and useful information at http://www.exploringbinary.com/ to which I am indebted and in particular for covering decimal-binary conversions on glibc. There is also more on this topic on my pilot WWW site mcintosh.web.cern.ch/mcintosh/. Performance: Last but not least, I need to study the loss of performance due to the various changes required for numeric compatibility. The worst case would be to compare an old version of SixTrack with ifort at maximum optimisation with the current SixTrack. I have measured that sse2/sse3 gives a factor of two at least speed up. 
However as often stated, what we need is capacity, and I consider it important to use any of your hardware. GPUs should be used when available but might require significant re-structuring of the code. As a first step when possible I would try a minimum of 512 particles in a WU. (SixTrack has about 5MB of code, 16MB of data, and almost 80MB of BSS for 60 particles. 512 particles would therefore need about 600MB total. This should fit in GPU but how to compile, organise and minimise host/GPU communication?) No promises here, not this year, but I would like to try this also to verify numeric compatibility which would require crlibm for GPU. I personally feel performance is over-emphasised much of the time (in spite of years of optimisation and benchmarking). I shudder to think of all the time spent on handling Extended Precision, debugging, and even wasted due to the floating-point error. I can say this because LHC@home is now providing the capacity we need! Industry is also looking at saving on power and cooling and even at using only necessary precision. I am also keen to try Android and it would be fun to build my own Raspberry Pi cluster for SixTrack. Well one can dream. Enough for now; I am hoping this note will serve as a basis for planning and prioritisation for the next few months as well as clarifying the project status for you. I do try and look at the Server Status and MB's every day and I expect (a lot?) of feedback, which I shall try and digest as part of our planning, bearing in mind that the top priority is the production of valid results in a timely fashion with easy to use procedures. |

|

Joined: 15 Sep 13 Posts: 73 Credit: 5,763 RAC: 0 |

10,000 years ago men stopped teaching their sons how to hunt and kill mastodons because the mastodons all died off... there is no longer any reason for anyone to know how to bring down a mastodon with a pointy stick. 200 years ago men in India stopped making spears for killing tigers because they found guns work a lot better. About 60 years ago they stopped producing steam locomotives because diesel-electric units are far more powerful and more fuel efficient. Now we have a new and better way of achieving compatible results on different platforms. It's called a virtual machine. It's time for you to put away your dream. It was a good dream and a worthy goal 10 years ago but today it's like killing a tiger with a spear. It may be fun, it may give one a sense of accomplishment but it's not something that needs to be done. Get with the times and get with the virtual machine. We are not here to have fun perfecting anachronisms, we're here to crunch as efficiently as possible. And now you're talking about 200 MB uploads. Two very important points on that topic: 1) I will be checking my logs closely and the first time I see a 200MB uncompressed upload from this project I will detach this project permanently and so will thousands of other volunteers. Get the zlib libraries and a version of the server code that can use it before you even think of up/downloads that big. 2) I will not be pleased if I see a 200MB result upload and later fail to verify against a result from one of the other platforms. There is no longer any need whatsoever for that to happen. Do the right thing and start using a virtual machine. 6 bangers are for wussies. |

|

Joined: 12 Jul 11 Posts: 857 Credit: 1,619,050 RAC: 0 |

"10,000 years ago men stopped teaching their sons how to hunt and kill mastodons because the mastodons all died off... there is no longer any reason for anyone to know how to bring down a mastodon with a pointy stick." 200MB is my worst case estimate...we shall see. All input and output is ZIPPED already by SixTrack. But thanks for your feedback. Eric. |

|

Joined: 12 Mar 12 Posts: 128 Credit: 20,013,377 RAC: 0 |

Eric, 1. Don't worry about output size of files - it takes as much as it needs. People might not realise that they supply either 10 results of 2MB each or 1 result of 20MB from a task that runs 10 times longer. Size doesn't matter if it's already optimised. Say the climateprediction project has output >50MB (or 60MB sometimes, might be up to 182MB) and runs for 400-500 hours. So what? (do not listen to trolls please) 2. Using GPU is great idea, while other projects use it a lot, there are some compatibility issues between nVidia and ATI chips. Be aware. 3. As for emailing the whole list of crunchers, it would be a great idea; probably we agreed to receive messages when we signed up for the project? 4. Could people with overclocked hardware somehow be identified? Or could that hardware at least be given only minor non-critical tasks, if there are any, or a special type of calculation where overclocking makes no difference? 5. Why did you put this thread in the Crunch section instead of News, if it even has a header "News, Plans" etc? 6. Thanks for your hard work, much appreciated |

|

Joined: 15 Sep 13 Posts: 73 Credit: 5,763 RAC: 0 |

"do not listen to trolls please" Don't listen to people who whine repeatedly about lack of Sixtrack work and don't have a clue what "trolls" are saying because they can't read English.

Because the first post of any new thread started in News automatically gets put in the News section of the home page. See how long the first post in this thread is? He didn't want that in the home page News so he put it here instead. 6 bangers are for wussies. |

Tom95134 Joined: 4 May 07 Posts: 250 Credit: 826,541 RAC: 0 |

"2. Using GPU is great idea, while other projects use it a lot, there are some compatibility issues between nVidia and ATI chips. Be aware." For what it's worth, the present amount of work coming from LHC@home does not, in my opinion, justify the effort it would require to provide GPU support. Regarding emailing, for some reason items posted in NEWS by the Moderators are not being propagated to the News tab on BOINC. Someone needs to look into this so that real News items show up when BOINC starts. If real News postings appeared on BOINC at start-up then maybe these News type items would get the attention they should. I would imagine that most people only look at the Crunch section. |

|

Joined: 26 Sep 11 Posts: 37 Credit: 7,807,848 RAC: 0 |

There may indeed be diverse opinions on the merits of million-turn tasks and 200MB downloads and uploads. Personally I think it's great, but probably not suitable for all my machines. I would be delighted to crunch such tasks on my Intel i7-3770 powered desktop. I also do not expect to be constrained by bandwidth. However, I would probably not want to try the same thing on my Intel Atom powered netbook; particularly if I have to use a lousy wireless connection. I suggest a solution is to split those tasks into a different application type that people can select on the Sixtrack preferences page. Similar to how Einstein@Home has different applications for BRP, Gravitational Wave, and Gamma-ray pulsar searches. Perhaps the default for the large tasks should be turned off. People willing and with the bandwidth to deal with those tasks too can then select them explicitly. |

|

Joined: 27 Sep 08 Posts: 910 Credit: 776,675,600 RAC: 193,050 |

I agree about the GPU work, I don't see much value if you don't need so much compute power? I also agree that giving users options on large/small WU would be desirable, personally 200MB is fine. I never look at the graphics so I don't see that as a value added task, I'm here for the science. Keep up the great work, glad to hear that your research continues to be supported. |

|

Joined: 12 Mar 12 Posts: 128 Credit: 20,013,377 RAC: 0 |

1. As for "to GPU or not to GPU", there is a balance between supply and demand. There are 305k desktops to perform crunching, and if we could supply more calculating power then more projects could be done in a shorter time, if that is really needed. 2. Internet speeds these days are amazing, and file sizes like 10MB or 200MB don't really make any difference unless you are on dial-up. Say I have 2Mb/s upload speed and don't really see a difference in uploading any file size. If it is potentially a problem for other people, it has to be clarified with them first. 3. Having split tasks for 1M or 100K turns might be a great idea, but HOW do users know these tasks are available if they are switched off by default? The simplest might be for the LHC@HOME project to keep the 100K tasks and for a new project, say LHC@HOMEBIG or LHC1M@HOME or LHC@1M, to have the 1M tasks; those of us who have no issues with upload speed and time sign up for it. 4. As for the graphics display, it might be worth having views not on the CLIENT side but on the SERVER side, where you might show us combined results. In real life, who ever needs to see the 67,843rd turn of 100K turns of a particle? |

|

Joined: 15 Sep 13 Posts: 73 Credit: 5,763 RAC: 0 |

"It is shameful that we do not have a one-click installation for the BOINC client" Eric, The only thing shameful is that you refuse to give your head a shake and smell the coffee. You can have BOINC running on SLC6 and have all of us running that if you want to. It would take some work as you would need to recompile BOINC on SLC6 and that might require porting some shared libs. You would then provide a VirtualBox VM for download. The VM would have SLC6 installed as the OS and have BOINC for SLC6 installed as an app. You could install just BOINC client and supply an already coded and tested manager that uses an ncurses interface. This approach doesn't need a wrapper and thus avoids many of the problems T4T encounters. Or do what your sister project is doing and send a wrapper and a VM with SLC6 installed to existing BOINC clients installed on Windows, Linux, OSX, AIX, FreeBSD and several other OSs. It requires a wrapper but the vboxwrapper is working very well now and this approach would not require you to recompile BOINC. With either approach you just relax and don't worry about propagation of rounding errors and all that nonsense that you don't have to worry about anymore because all tasks will be running on the same OS. It ain't 1960 anymore. It's 2013. Get with the times, man! PM me for more details, I can help. 6 bangers are for wussies. |

jay Joined: 10 Aug 07 Posts: 60 Credit: 837,760 RAC: 0 |

Hello Eric! First of all, thank you for the status report. It gave an in-depth view of the trials and tribulations of the lhc@home effort. Coming out with exact floating point results on different platforms is amazing. [begin slightly-off-topic] I share your concern about getting quick results back to the physicists. I'm running beta Seti@home and they have a deadline for GPU of about 6 weeks. IMHO, this is a waste of the database resources that track the results from different clients for validation. I applaud lhc@home's use of a shorter deadline. I would suggest an even shorter deadline, but doing that can put a limit on the performance of the volunteer's machine. [end slightly-off-topic] About the disk space. Is starting a pay-pal donation account within reason? Or is showing due diligence too mind-bogglingly difficult? Thanks again for the report.. Jay |

|

Joined: 27 Sep 08 Posts: 910 Credit: 776,675,600 RAC: 193,050 |

@Costa some good comments: 3. I'd say the users that have the best computers will get the big WU's as they're savvy enough to look at the settings. 4. This is a cool idea. |

Roger Parsons Joined: 18 Dec 11 Posts: 17 Credit: 947,327 RAC: 0 |

All this is above my head, Henry, but I will make a layman's comment. Were this a professional setup with adequate funding, a state-of-the-art option might be the right one. As I recall, the BOINC idea was to get grunts like myself to participate in some "Citizen Science", using our very ordinary home set-ups. The impact of large packages would be significant for me - here's my reality. I live "at the end of the line" of an old-fashioned rural telephone system. Broadband is unavailable except as a mobile signal via a dongle in a Yagi antenna on the wall of the house - best when the leaves are off the trees! Up/download speeds are too embarrassing to mention. GPU issues have plagued the running of SETI@home, so I no longer use that option. My take is that if you have relevant expertise you should be taking these issues up with Eric by PM. With respect, I can't see the point in "shouting" on this thread. It only frightens the horses. I am content to crunch whatever comes along in the hope that from time to time we will get some interesting feedback. Maybe LHC needs to put together a small team of knowledgeable folks as a "think tank" to stimulate evaluation of the best options for the future - and maybe you should be the first recruit? "Any fool can appreciate mountain scenery. It takes a man of discernment to appreciate the Fens." [Harry Godwin - pollen analyst - circa 1932] |

|

Joined: 15 Sep 13 Posts: 73 Credit: 5,763 RAC: 0 |

"All this is above my head, Henry, but I will make a layman's comment. Were this a professional setup with adequate funding, a state-of-the-art option might be the right one." True but I'm not asking for anything state of the art or expensive. You can always tell what's state of the art and what is not... if I own one or know anything about it then it's run of the mill. "As I recall, the BOINC idea was to get grunts like myself to participate in some 'Citizen Science', using our very ordinary home set-ups. The impact of large packages would be significant for me - here's my reality. I live 'at the end of the line' of an old-fashioned rural telephone system. Broadband is unavailable except as a mobile signal via a dongle in a Yagi antenna on the wall of the house - best when the leaves are off the trees! Up/download speeds are too embarrassing to mention. GPU issues have plagued the running of SETI@home, so I no longer use that option." Nothing I have proposed would exacerbate or alleviate your situation so I wonder if I missed something? "My take is that if you have relevant expertise you should be taking these issues up with Eric by PM. With respect, I can't see the point in 'shouting' on this thread. It only frightens the horses." Just the other day my friend was telling me the Samurai used to poke holes in their horses' eardrums to deafen them so they wouldn't be so easily spooked in noisy battles. It seems to me the sysadmins, devs and other powers that be at this project have also had their eardrums destroyed, by Samurai for all I know, since nothing anyone says here ever earns a response unless one gets downright nasty, loud and aggressive about it. I'm beginning to think Samurai poked their eyes out as well. The deaf, dumb (frequently) and blind routine has been going on since some time before my puppet-master arrived here in 2008. Yes, I am a lowly sock-puppet. 
My master was banished forever from this forum though they gladly receive the results of any tasks he crunches for them. If the polite way doesn't get results then there's little to lose by raising one's voice. "I am content to crunch whatever comes along in the hope that from time to time we will get some interesting feedback." That's nice of you, Roger. Some of us want more than interesting feedback. Some of us want what the project scientists want which is success. The thing is there are many BOINC projects that want success and there is a scarcity of that which yields success... our CPU cycles. It frustrates many of us when projects waste our CPU cycles (and your CPU cycles too) as it decreases every project's prospects for success. My master is active in a number of projects and sees so many project developers and admins working their butts off to optimise their code and operations for the purpose of getting more done with less. Meanwhile, this project, one of the most important projects there will ever be, plods along with anachronisms everybody else abandoned some time ago. Anything approaching "up to date" at this project was implemented only after a few forward thinking volunteers kicked their butts repeatedly, severely and relentlessly and shamed them all over the BOINC world. "Maybe LHC needs to put together a small team of knowledgeable folks as a 'think tank' to stimulate evaluation of the best options for the future - and maybe you should be the first recruit?" That's one heck of a good idea! And we/they already have it in place. It's called "this forum" and most of the truly knowledgeable folks that I know of lurk here and I suspect many gurus I don't know about lurk here as well. If the project admins want their help/advice/reflections all they have to do is respond in this forum, keep a dialogue going and implement suggestions. 
It's a process that works very well at numerous other projects and the only reason it doesn't work here is because they appear to not want any help. For example, they have a problem with what's known as "the tail of the dragon". Other projects overcame that problem years ago and a few of us here tried to point them in the right direction. They ignored that help yet they still whine about the tail. 6 bangers are for wussies. |

Roger Parsons Joined: 18 Dec 11 Posts: 17 Credit: 947,327 RAC: 0 |

All professions are conspiracies against the laity, Henry. I shall leave the Gurus to come back on your technical points. I was content to offer you the grunt's pure intellect, unencumbered by factual knowledge - though seemingly this forum is not doing what you want it to and a more formal and private set up might be a positive way forward - but if you are happy with things as they are, then so am I. "Any fool can appreciate mountain scenery. It takes a man of discernment to appreciate the Fens." [Harry Godwin - pollen analyst - circa 1932] |

|

Joined: 15 Sep 13 Posts: 73 Credit: 5,763 RAC: 0 |

"All professions are conspiracies against the laity, Henry. I shall leave the Gurus to come back on your technical points. I was content to offer you the grunt's pure intellect, unencumbered by factual knowledge - though seemingly this forum is not doing what you want it to and a more formal and private set up might be a positive way forward - but if you are happy with things as they are, then so am I." I've walked on both sides of that fence, Roger, lay as well as professional. There is a divide there, you could say a conspiracy. I'm not happy but not sad/angry either. Thanks for your interest in my concerns as well as this project's concerns. I won't rule out a private setup but the benefit of discussions in the forum is that knowledge gets passed down from the gurus to people I broadly refer to as "moving up". I don't know all the details of what I would like to see happen here but I can get things rolling and if there is a serious discussion then others far more knowledgeable than me will join the discussion. That way novices can learn, people at the intermediate level can advance their understanding, everybody benefits. But if it has to be a private thing then so be it. 6 bangers are for wussies. |

Roger Parsons Joined: 18 Dec 11 Posts: 17 Credit: 947,327 RAC: 0 |

Makes sense, Henry. I can imagine how exasperating this development process can be - even though I am on the fringes of it and know virtually nothing about the technical issues. I suspect most LHC number crunchers are "users" like myself rather than "practitioners" like you, and are somewhat bewildered by debates that might as well be about theology! A common failing amongst experts is they don't begin to grasp quite how ignorant the rest of us are!!!! However I have observed that small groups working in private can often achieve progress that is impossible in open debate - not that there is anything wrong with a good combative exchange. In my own field the cantankerous exchanges of the experts are legendary. "Any fool can appreciate mountain scenery. It takes a man of discernment to appreciate the Fens." [Harry Godwin - pollen analyst - circa 1932] |

|

Joined: 19 Feb 08 Posts: 708 Credit: 4,336,250 RAC: 0 |

Today I got 3 tasks to run and I am happy with this project. I am running 7 BOINC projects on 2 Linux boxes, no GPU, and I am never out of work. I am running Test4Theory@home on one of them with VirtualBox 4.2.18, SETI@home MB and Einstein@home. This CPU gets Albert@home, SETI Astropulse, CPDN (very long lived) and LHC@home when available. I wish I could also run QMC@home, but they sent me a 64-bit app while my OS is 32-bit SuSE Linux and it crashes immediately. I protested in vain. Such is life. Tullio |

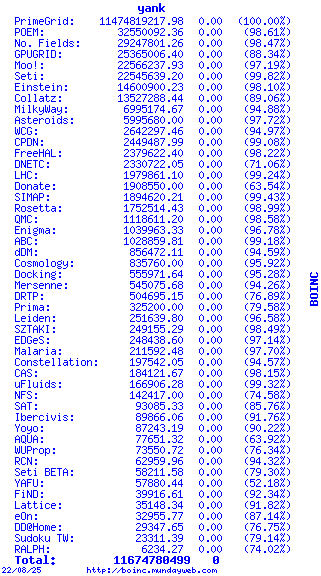

Yank Joined: 21 Nov 05 Posts: 64 Credit: 1,979,861 RAC: 0 |

My three machines are receiving many work units. At this present time there are 57,000 work units waiting to be downloaded.

|

Coleslaw Joined: 29 Apr 08 Posts: 24 Credit: 5,033,746 RAC: 0 |

My vote is to keep the work units small. Big work units can cause worse network congestion where multiple hosts reside. Small files have less of an impact on users' networks even if multiple files need to be returned. Large files may help with designing GPU work, but come with other consequences. If you are looking to go to ARM processing, many people are on tiered data limits. Even my cable internet provider has switched to tiered data. Since many ARM devices have a data limit option, this can be managed on the user's side. However, it is a growing concern. Now if the option was there to select whether to run long or short work units like some projects offer, then that would probably be the best solution.

|
