Message boards : Number crunching : Long WU's


| Author | Message |

|---|---|

Gary Roberts Send message Joined: 22 Jul 05 Posts: 72 Credit: 3,962,626 RAC: 0 |

So it is definitely something with AP, and probably also something with v6.2.x, but probably only when in combination with AP. I've had other commitments since I wrote my original message last Friday so I'm sorry I'm only just now able to find time to catch up with the various replies. Particular thanks to Richard for pointing out the implications of the client reporting zero run time. I know I'm using an old client but I have particular reasons for doing that. This old client version does report both run time and CPU time for tasks running normally. It's only when AP is invoked that the run time is set to zero. AP has now been removed from all my hosts. It took a while for the caches to drain on the last few of them as they had 100+ tasks on board at the time NNT was set last week. This is apparently a further artifact of the old client and AP combination. When running normally, the limit of 4 tasks per core is enforced. When running under AP the limit disappears and the client keeps receiving tasks whenever it makes a request. I would like to apologise to all those who received zero credit for tasks when paired with one of my AP hosts. Now that none of them ever seem to run the generic app, there is no reason for me to use AP here again. I'm sorry it took a while for me to realise the extent of the problem and that it could be worked around by getting rid of AP. There was one aspect that puzzled me until just now. When some tasks were receiving zero credit, why didn't all tasks, since all had zero run times? I just noticed that the tasks receiving zero credit all seem to be the _0 task, which in turn seems to become the canonical result in a two-task quorum. Seemingly, if my task didn't become the canonical result then all was fine. Cheers, Gary. |

|

Send message Joined: 12 Jul 11 Posts: 857 Credit: 1,619,050 RAC: 0 |

Thanks for that; seems to fit in with Igor's post on Credits. See his latest post Credits, but he will look at all this again tomorrow. |

|

Send message Joined: 9 Oct 10 Posts: 77 Credit: 3,727,865 RAC: 0 |

I just got one more big WU ... estimated to run 260 hours on my old P4-m 1.4GHz ... deadline is on the 28th. |

|

Send message Joined: 12 Jul 11 Posts: 857 Credit: 1,619,050 RAC: 0 |

I don't really understand why, but should be OK now. Keep me posted. Eric. |

|

Send message Joined: 9 Oct 10 Posts: 77 Credit: 3,727,865 RAC: 0 |

Unfortunately, it won't make it ... only two days remaining to the deadline, and still 145 hours of calculation to go :( |
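The arithmetic behind that post can be sketched as below. This is only an illustration, assuming the host crunches this one task around the clock; the function names `hours_short` and `can_meet_deadline` are made up for the example and are not part of BOINC.

```python
def hours_short(hours_left_to_compute: float, hours_until_deadline: float) -> float:
    """Return how many hours the task overruns the deadline (0.0 if it fits)."""
    return max(0.0, hours_left_to_compute - hours_until_deadline)


def can_meet_deadline(hours_left_to_compute: float, hours_until_deadline: float) -> bool:
    """True when the remaining computation fits in the time left.

    Assumption: the task runs 24 hours a day with no other work competing.
    """
    return hours_short(hours_left_to_compute, hours_until_deadline) == 0.0


# Figures from the post: 145 hours of calculation left, two days to the deadline.
remaining = 145.0
until_deadline = 2 * 24.0

print(can_meet_deadline(remaining, until_deadline))  # False
print(hours_short(remaining, until_deadline))        # 97.0
```

With those figures the task would finish roughly four days after the deadline unless the deadline is extended or the result is still accepted late.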

|

Send message Joined: 12 Jul 11 Posts: 857 Credit: 1,619,050 RAC: 0 |

Well I am really not sure that the deadline won't be extended... If not, my apologies. I have got 99.9% of these back now, but then again maybe they were from "fast" machines. We will be doing a full post-mortem and are studying how to do better next time with lots of great feedback and suggestions. |

|

Send message Joined: 29 Nov 09 Posts: 42 Credit: 229,229 RAC: 0 |

Report deadline: 30 Aug 2012 | 8:55:53 UTC Received: 30 Aug 2012 | 14:16:05 UTC Validate state: Valid Looks like I got lucky on this one. So it was OK not to cancel it after all. |

|

Send message Joined: 12 Jul 11 Posts: 857 Credit: 1,619,050 RAC: 0 |

Well I am not so sure about luck. As I said we shall be looking at this next week and I'll get back to you (and others) |

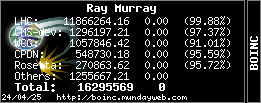

Ray Murray Send message Joined: 29 Sep 04 Posts: 281 Credit: 11,888,115 RAC: 0 |

I seem not to have chosen my wingmen for the long WUs wisely 8¬( Of the 8 I have completed, only 2 wingmen have returned successfully, with 1 still in progress. 2 errored out (pages and pages of errors for this host), 2 missed the deadline (30 missed deadlines on this host) and 1 aborted (aborted all his long ones). The 5 unreturned ones are all still listed as Unsent. Just wondering if they will be resent for my results to get validated or if the Long WU experiment is complete for now?

|

Magic Quantum Mechanic Send message Joined: 24 Oct 04 Posts: 1264 Credit: 93,372,680 RAC: 110,613 |

I just sent in my long task and it is validated too. http://lhcathomeclassic.cern.ch/sixtrack/result.php?resultid=6347063 Volunteer Mad Scientist For Life  unbelievable are you trying to promote linux again? |

jujube Send message Joined: 25 Jan 11 Posts: 179 Credit: 83,858 RAC: 0 |

Well I am not so sure about luck. As I said we shall be It has nothing at all to do with luck and everything to do with skill :-) Volunteers need to learn that it is extremely difficult, if not impossible, to schedule tasks properly for projects that have huge variations in run times; therefore it is of utmost importance to configure an extremely small cache of no more than 0.1 days. Volunteers also need to remember that it's not the end of the world just because a Sixtrack task goes into panic mode and suspends some of their other projects for a while. Panic mode simply borrows some time from other projects, but those projects get paid back and the project shares the volunteer specifies will be honored over the long run. But, for that to work and to ensure that the other projects' tasks don't miss their deadlines, volunteers absolutely MUST CONFIGURE A SMALL CACHE, ESPECIALLY IF THEY HAVE A SLOWER CPU. |

|

Send message Joined: 19 Feb 08 Posts: 708 Credit: 4,336,250 RAC: 0 |

I should have two tasks running on my laptop. One is running and should meet its deadline. The other, workunit 2828795, does not appear on my BOINC manager. Was it a ghost unit? Tullio |

|

Send message Joined: 19 Feb 08 Posts: 708 Credit: 4,336,250 RAC: 0 |

OK, I got it. The laptop's BOINC manager is in Italian and sometimes its messages are not clear to me. English is much clearer. Tullio |

|

Send message Joined: 12 Jul 11 Posts: 857 Credit: 1,619,050 RAC: 0 |

Thanks for that; I think we shall have an interesting (long) discussion next week! |

|

Send message Joined: 27 Oct 07 Posts: 186 Credit: 3,297,640 RAC: 0 |

Thanks for that; I think we shall have an interesting (long) discussion next week! The usual rule of thumb isn't quite as drastic as jujube suggests: Look at all your projects, find the one which has the shortest deadlines, and divide that deadline by the number of different projects you're attached to. So, with deadlines here being 7 days, that's probably your shortest. If you're attached to 3 or 4 projects, a 2 day cache might be OK: if you're attached to 7 or 8 projects, don't set a cache above 1 day. |
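The rule of thumb quoted above is a single division, which can be sketched as follows. This is a rough illustration only: the function name `suggested_cache_days` is invented for the example, it is not a BOINC setting or API, and the result is an upper bound to stay under rather than a recommendation from the project.

```python
def suggested_cache_days(shortest_deadline_days: float, attached_projects: int) -> float:
    """Upper bound for the work cache, per the rule of thumb in the post:
    shortest deadline among all projects divided by the number of projects."""
    if shortest_deadline_days <= 0:
        raise ValueError("deadline must be positive")
    if attached_projects < 1:
        raise ValueError("need at least one attached project")
    return shortest_deadline_days / attached_projects


# Deadlines here are 7 days, assumed to be the shortest of all attached projects.
for projects in (3, 4, 7, 8):
    limit = suggested_cache_days(7.0, projects)
    print(f"{projects} projects: keep the cache at or below about {limit:.2f} days")
```

For 3 or 4 projects the bound comes out near 2 days, and for 7 or 8 projects near 1 day, which matches the figures in the post. It does not account for slow hosts or unusually long tasks.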

jujube Send message Joined: 25 Jan 11 Posts: 179 Credit: 83,858 RAC: 0 |

Thanks for that; I think we shall have an interesting (long) discussion next week! Will that rule of thumb work for slow hosts with old P4 or Athlon64 processors? How about P3 machines? I mean you used the word "usual" which means it might not apply in all cases so are there cases where it's advisable to set the cache even smaller, for example for P3 or P4 era hosts? Perhaps for slow hosts it would be advisable to cut the cache size in half again? Also, based on other volunteers' experiences and reports it seems to me your "usual" rule of thumb tends to fail when one of their projects issues tasks that are very much longer than their usual tasks. I'm not sure exactly why but that seems to be how it works. Any comments on that? |

|

Send message Joined: 27 Oct 07 Posts: 186 Credit: 3,297,640 RAC: 0 |

Will that rule of thumb work for slow hosts with old P4 or Athlon64 processors? How about P3 machines? I mean you used the word "usual" which means it might not apply in all cases so are there cases where it's advisable to set the cache even smaller, for example for P3 or P4 era hosts? Perhaps for slow hosts it would be advisable to cut the cache size in half again? I think the rule of thumb - which I quoted, but did not originate - dates from even before the era when P4s and Athlons ruled the world. Also, based on other volunteers' experiences and reports it seems to me your "usual" rule of thumb tends to fail when one of their projects issues tasks that are very much longer than their usual tasks. I'm not sure exactly why but that seems to be how it works. Any comments on that? All absurdly general assertions need an exception, and I think you've put your finger on it. That rather depends whether you regard Eric's occasional experiments as an excitement, warranting manual intervention and micromanagement: or whether you prefer a totally fail-safe configuration, where 'auto' mode can cope with every eventuality. And it depends how reliable the other projects are, too. I'm a close observer, willing to step in and micromanage when needed: so when I saw a 'long' task waiting eighth or ninth in line on a Q6600 with 2 days' cache, I bumped it to start running next: your strategy would have allowed BOINC to do that by itself. Horses for courses. |

|

Send message Joined: 9 Oct 10 Posts: 77 Credit: 3,727,865 RAC: 0 |

The WU is still being processed : 170 hours done, still 16 to go. Luckily, the task has not been reassigned yet, so I might be able to return it and get full credits for it, and the work won't be duplicated :) |

|

Send message Joined: 19 Feb 08 Posts: 708 Credit: 4,336,250 RAC: 0 |

My cache is very small, 0.25 days, and I rarely have more than one task of each of my 7 projects running. Only climateprediction.net gave me 2 tasks on the SUN WS but it has deadlines which are biblical in time so that is not a problem. On this laptop, running 4 projects and hibernating at night, I have to set NNT when a project sends me another task before the preceding one is finished. All my projects are equally shared; only Test4Theory@home runs on both systems and has overtaken the RAC of the deceased QuantumFIRE Alpha, which still appears in BoincStats. Tullio |

jujube Send message Joined: 25 Jan 11 Posts: 179 Credit: 83,858 RAC: 0 |

Will that rule of thumb work for slow hosts with old P4 or Athlon64 processors? How about P3 machines? I mean you used the word "usual" which means it might not apply in all cases so are there cases where it's advisable to set the cache even smaller, for example for P3 or P4 era hosts? Perhaps for slow hosts it would be advisable to cut the cache size in half again? There have been many changes to the scheduler since then. Perhaps the rule of thumb needs to be revised. Also, based on other volunteers' experiences and reports it seems to me your "usual" rule of thumb tends to fail when one of their projects issues tasks that are very much longer than their usual tasks. I'm not sure exactly why but that seems to be how it works. Any comments on that? I like to keep a close eye on BOINC but often I have to ignore it for a few days. I don't mind a little manual intervention but some volunteers have even less time for BOINC watching than I do and I can appreciate their desire for a totally fail-safe configuration. Alas, there probably is no such thing as a totally fail-safe configuration given the level of unpredictability (chaos?) when attached to several projects. One never knows what curve ball Project XYZ is going to throw at the scheduler next and that's why I recommend a very small cache of 0.1 days if one wants it to work as automatically as possible. I also strongly encourage projects to make sure task deadlines suit the maximum duration of their tasks. In that regard, I do feel Sixtrack dropped the ball with the very long tasks issued recently. I would ask that the admin(s) issuing the tasks also understand how deadlines are configured on the server and be willing to set up the deadline before issuing a batch of unusually long tasks. Issuing long tasks then emailing the other admin a request to adjust the deadline accordingly is not the way to do it, if that's what's been happening. 
Warn the other admin first and don't issue the long tasks until the other admin indicates appropriate adjustments have been made. The left hand needs to know what the right hand is doing at all times. As I said, from the client's perspective there is a great deal of chaos in the system when you're attached to several projects so every project needs to make sure the info they send to hosts regarding their tasks is appropriate/accurate. Projects that cause problems get set to NNT very quickly and are returned to "active duty" not so quickly. I would also like to remind Eric that BOINC does not revise the deadline; there was some speculation that it does. BOINC does revise the estimated duration of a project's tasks when it discovers a project's tasks are longer or shorter than expected. The deadline, however, is sacred and is never adjusted by BOINC client or server. I'm a close observer, willing to step in and micromanage when needed: so when I saw a 'long' task waiting eighth or ninth in line on a Q6600 with 2 days' cache, I bumped it to start running next: your strategy would have allowed BOINC to do that by itself. Horses for courses. Indeed my strategy did exactly that. The downside of my strategy is that if I lose my Internet connection for very long I run out of work rather quickly. Fortunately my ISP is extremely reliable and power outages in my area are extremely rare. I appreciate that some volunteers' ISPs are very unreliable so a small cache may not be appropriate for them. |

©2026 CERN