Message boards : Number crunching : SOME greedy users

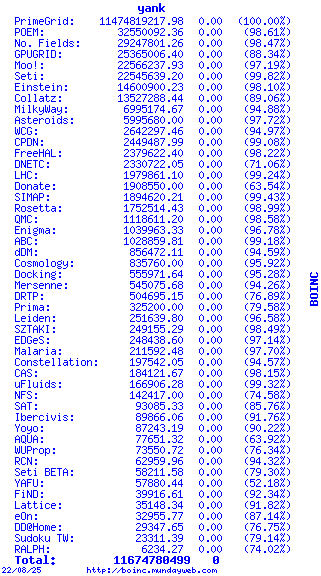

Yank · Joined: 21 Nov 05 · Posts: 64 · Credit: 1,979,861 · RAC: 0

The last time LHC had units to work on, about 5 or 6 days ago, one of my three computers got about 20 to 30 units (I didn't count them). The other two didn't get any. SETI and LHC are the only projects running on these machines, and they share 100% of the computer time. I have a five-day cache. The LHC units I still have in progress take about 5 hours each, +/-. Since LHC has an earlier return deadline than SETI, the LHC units are being completed first, two at a time. I think the machines are running like they should, but with all this talk of a few people being greedy in downloading work units, I was concerned that perhaps my machines were taking too much, and I was wondering just how many units is too many?

Joined: 7 Oct 06 · Posts: 114 · Credit: 23,192 · RAC: 0

What if there is also a human element at work behind the server? :-) The human element has been highlighted from the crunchers' side :-), but what if some human behind the server likes the way certain machines crunch? :-) Then what is stopping them from showering WUs on the machines on their green list, like the machines that complete WUs before the rest? :-) Any comments? Regards, Masud.

Joined: 4 Oct 06 · Posts: 38 · Credit: 24,908 · RAC: 0

Yank, another possible solution to consider: since you're only crunching two projects (SETI and LHC), could you set a large resource share in favor of LHC (1000:1 or 100:1), effectively using SETI as a backup project? Then when LHC does have work, that's all that host will crunch, and finishing it will only take as long as your cache is set for (since the host will switch to LHC full time because of the high resource share). I crunch 5 other projects aside from the two intermittent ones (LHC and SIMAP). LHC and SIMAP have a resource share of 1000 each, and the other 5 projects have 100. So when neither LHC nor SIMAP has work, each of the 5 still gets an equal share. When either LHC or SIMAP has work, it crunches that project full time. If both have work, they each get roughly 50%.

Don't get distracted by shiny objects.
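To see why a lopsided resource share turns SETI into a backup project, here is a minimal sketch of the nominal arithmetic. It assumes the simplest possible model: CPU time is split in proportion to resource share among only those projects that currently have work. The real BOINC client also weighs deadlines and long-term debt, so treat the numbers as a rough guide rather than the client's actual scheduler. The function name `cpu_fractions` is hypothetical.

```python
def cpu_fractions(shares, has_work):
    """Nominal CPU fraction per project (simplified model, not BOINC's scheduler).

    shares:   dict of project name -> resource share
    has_work: dict of project name -> True if the project currently has tasks
    Only projects with work compete; each gets share / (sum of competing shares).
    """
    active = {p: s for p, s in shares.items() if has_work.get(p, False)}
    total = sum(active.values())
    if total == 0:
        return {p: 0.0 for p in shares}  # nothing to crunch at all
    return {p: (active[p] / total if p in active else 0.0) for p in shares}


# Larry1186's suggestion for Yank: LHC 1000, SETI 1.
shares = {"LHC": 1000, "SETI": 1}

# LHC has work: it gets about 99.9% of the CPU, SETI about 0.1%.
print(cpu_fractions(shares, {"LHC": True, "SETI": True}))
# LHC is dry: SETI picks up the whole CPU as the backup project.
print(cpu_fractions(shares, {"LHC": False, "SETI": True}))
```

Shares only matter relative to each other, so 100:1 gives much the same picture (LHC at about 99% while it has work).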

|

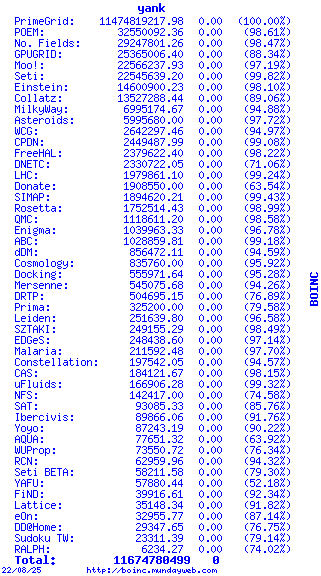

Yank · Joined: 21 Nov 05 · Posts: 64 · Credit: 1,979,861 · RAC: 0

Ok, LARRY1186, I will try to figure out how to do what you suggest.

Joined: 18 Sep 04 · Posts: 5 · Credit: 31,262 · RAC: 0

> All the complaining is like buying a Jeep and then demanding it must go 150mph. It just doesn't work, because it isn't designed to do that.

Falcon, you totally miss the point. The complaint is NOT the lack of an endless stream of work. The complaint is not being unable to acquire work because a number of users have deep caches, which locks away for days work that others could be doing.

clownius · Joined: 1 May 06 · Posts: 34 · Credit: 64,492 · RAC: 0

I have a short 0.2-day cache myself and have received work regularly recently. Not heaps of work, but enough to keep LHC ticking over constantly. If you lower the amount of work each user can receive and we get another batch of 5-10 second WUs (this does happen regularly), we might run out of ready crunchers before we run out of work. Things work now: if people stop micromanaging BOINC and let it do its thing, they will get WUs.

Gary Roberts · Joined: 22 Jul 05 · Posts: 72 · Credit: 3,962,626 · RAC: 0

> The last time LHC had units to work on, about 5 or 6 days ago, one of my three computers got about 20 to 30 units (I didn't count them). The other two didn't get any. SETI and LHC are the only projects running on these machines, and they share 100% of the computer time. I have a five-day cache.

Getting 30 WUs with a five-day cache is pretty much what you would expect to happen. I'm very surprised that your other two machines didn't get any, as the work was available for quite a long time. Those machines must have been on a very long backoff (more than 24 hours) to have missed out completely if SETI was your only other project. Alternatively, your SETI cache may have been overfull for some reason.

> The LHC units I still have in progress take about 5 hours each, +/-. Since LHC has an earlier return deadline than SETI, the LHC units are being completed first, two at a time. I think the machines are running like they should,

Yes, if you have a dual-core (or HT?) machine doing two units at a time every 5 hours, you will have no problem completing the work within the deadline. However, for LHC with its relatively short deadline, a 5-day cache is too big. Larry1186 has given you very good advice about structuring your resource shares to favour LHC, and you can do this by changing the resource share value in your project-specific preferences (not general preferences) on each relevant website.

With both LHC and SETI having (different) issues with work supply, it is quite likely that both could be out of work simultaneously. To guard against this, it would be useful (just as Larry1186 has done) to have at least one more project in the list. Einstein@Home (EAH) would be a good choice if you are into the physical sciences. You could set up an LHC/SETI/EAH resource share of 900/50/50 and a cache size of, say, 2.5 days max, which should work very well. SETI and EAH would share your machine equally and back each other up during periods when there is no LHC work.

> but with all this talk of a few people being greedy in downloading work units, I was concerned that perhaps my machines were taking too much, and I was wondering just how many units is too many?

The talk is just that: talk. A capable machine getting 30 work units is not being greedy. You will finish and return the work well within the deadline, so there is no problem. Too many WUs is the situation where you cannot complete the work within the deadline (e.g. if your machine is not running 24/7, or you also have too much work from other projects). BOINC will try very hard not to let this happen in the first place. You can help BOINC by reducing your cache size as suggested.

Cheers,
Gary.
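Gary's rule of thumb (too many WUs means you cannot finish them before the deadline) can be checked with simple arithmetic. Below is a minimal sketch that assumes every WU takes the same time, the machine runs a fixed number of WUs at once, and only some fraction of wall-clock time actually goes to these WUs (for example, a machine that is not on 24/7). The function names are hypothetical, and no real LHC deadline is assumed; compare the result with the report deadline shown on your own tasks.

```python
import math


def days_to_finish(n_wus, hours_per_wu, concurrent, available_fraction=1.0):
    """Wall-clock days needed to crunch a batch of equally sized WUs.

    n_wus:              number of work units in the cache
    hours_per_wu:       CPU hours per WU on this machine
    concurrent:         WUs run at the same time (e.g. 2 on a dual core)
    available_fraction: share of wall-clock time actually spent on these WUs
                        (e.g. 0.5 if the machine is only on 12 hours a day)
    """
    rounds = math.ceil(n_wus / concurrent)  # WUs are processed in "rounds"
    hours = rounds * hours_per_wu / available_fraction
    return hours / 24.0


def fits_deadline(n_wus, hours_per_wu, concurrent, deadline_days,
                  available_fraction=1.0):
    """True if the whole batch can be finished within deadline_days."""
    return days_to_finish(n_wus, hours_per_wu, concurrent,
                          available_fraction) <= deadline_days


# Yank's numbers: about 30 WUs, about 5 hours each, two at a time, 24/7.
print(days_to_finish(30, 5, 2))                          # 3.125 days
# The same batch on a machine that is only on half the time.
print(days_to_finish(30, 5, 2, available_fraction=0.5))  # 6.25 days
```

The second case shows Gary's caveat in numbers: the same cache that is comfortable on a 24/7 dual core doubles its turnaround on a part-time machine, which is where a short deadline starts to bite.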

Gary Roberts · Joined: 22 Jul 05 · Posts: 72 · Credit: 3,962,626 · RAC: 0

Actually, I'm sorry to say that I think it is really you who is missing the point. The point really is the patchiness of the work, because there wouldn't be any complaints about greedy users with deep caches if there were a continuous supply of work. Also, I think you meant "...not being able..." rather than "...not being unable..." :). It would be mind-blowing to see users complaining if they indeed were able (i.e. not being unable...) to get work :).

Cheers,
Gary.

Joined: 14 Jul 05 · Posts: 275 · Credit: 49,291 · RAC: 0

But what's the solution: having constant work, or not having greedy users?

> Also, I think you meant "...not being able..." rather than "...not being unable..." :). It would be mind-blowing to see users complaining if they indeed were able (i.e. not being unable...) to get work :).

Ohh, so he says you're wrong (true or not), and you get pedantic about grammar, trying to find the smallest details where he is wrong? How nice...

Gary Roberts · Joined: 22 Jul 05 · Posts: 72 · Credit: 3,962,626 · RAC: 0

I'm sorry, but I don't understand what you are on about. He didn't say I was wrong; in fact, he has never responded to me at any point that I'm aware of. He made a statement saying that FalconFly was missing the point, and I responded to question the notion of who was actually missing the point. I must say I enjoyed your treatise on grammar pedantry. There is nothing about grammar involved here. The grammar is actually correct, although many people do have problems properly handling the meaning when double negatives are used :). It's just that his words have the totally opposite meaning to what I think he was trying to say. So you decide to get involved and stir it all up with a fallacious claim about pedantry... How nice...

Cheers,
Gary.

Gary Roberts · Joined: 22 Jul 05 · Posts: 72 · Credit: 3,962,626 · RAC: 0

Actually, you only need a solution if there is a problem, and I don't think there really is a problem. Certainly not a problem that needs to be solved urgently as far as the project staff are concerned. By now everybody should know that work is patchy and unreliable. Anyone concerned about this should make it a priority to research the strategies that could improve their chance of getting appropriate work when work is available. My opinion is that there really isn't a large bunch of greedy users with huge deep caches who exhaust the work as soon as it appears. If there were, why did the work released around 30/31 December take well over a full day to be exhausted?

Cheers,
Gary.

Joined: 24 Nov 06 · Posts: 76 · Credit: 10,211,769 · RAC: 0

Don't forget about Long Term Debt. Just leaving machines attached to this project when there is no work will cause BOINC to concentrate its resources on this project to "make things right" compared to the other projects attached.

Dublin, California
Team: SETI.USA
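A toy model may help picture the long-term debt idea. This is a simplified sketch, not BOINC's actual accounting (the real client's rules for when debt accrues, and how it is normalised, have differed between versions): each period, every project is owed its resource-share fraction of the elapsed CPU time and is debited for the CPU time it actually used. A project that stays attached but gets no work keeps building up positive debt, which the client then tries to pay back when work appears. The function and variable names are hypothetical.

```python
def update_debts(debts, shares, used_seconds, elapsed_seconds):
    """One accounting period of a simplified long-term-debt model.

    debts:           dict project -> current debt in CPU seconds
    shares:          dict project -> resource share
    used_seconds:    dict project -> CPU seconds actually used this period
    elapsed_seconds: CPU seconds available in the period
    Debt grows by what the project was 'owed' and shrinks by what it used.
    """
    total_share = sum(shares.values())
    new = {}
    for p in shares:
        owed = elapsed_seconds * shares[p] / total_share
        new[p] = debts.get(p, 0.0) + owed - used_seconds.get(p, 0.0)
    return new


shares = {"LHC": 100, "SETI": 100}
debts = {"LHC": 0.0, "SETI": 0.0}
day = 24 * 3600

# Three days with no LHC work: SETI gets the whole CPU.
for _ in range(3):
    debts = update_debts(debts, shares, {"SETI": day}, day)

print(debts)  # LHC is owed 1.5 days of CPU; SETI is 1.5 days "in the red"
```

In this model the debts always sum to zero, so whatever LHC is owed is exactly what the other projects overdrew while it was dry.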

Joined: 22 Sep 05 · Posts: 21 · Credit: 6,650,380 · RAC: 1,431

I agree with PovAddict's comment about the nitpicking. Try to realize that we don't all have the same home countries and native languages. I still find it entertaining to read theregister.co.uk just to be exposed to some of the Queen's language and humor. If there's a disagreement or misunderstanding, maybe think about rephrasing the question or comment another way. I've been following the threads to see if there's any news about LHC being switched over to QMC's control, and it's a turnoff to read these threads when they're outright attacks. I still look just to see if there's a tidbit of useful information.

William

Joined: 7 Oct 06 · Posts: 114 · Credit: 23,192 · RAC: 0

> Don't forget about Long Term Debt. Just leaving machines attached to this project when there is no work will cause BOINC to concentrate its resources on this project to "make things right" compared to the other projects attached.

I like this idea of long-term debt. I have given more resources to LHC, and to pay off this debt, BOINC starts hunting for LHC work. My cache is set to 1.5 days, which is not deep. I am afraid that deep caches only lead to missed deadlines, so I only get five LHC WUs, but as the machine is connected 24/7, I have noticed one result being uploaded while at the same time BOINC is getting another WU from LHC.

Regards,
Masud.

Bruno G. Olsen & ESEA @ greenh... · Joined: 17 Sep 04 · Posts: 52 · Credit: 247,983 · RAC: 0

Ha, I might have looked greedy :D By accident, due to changes in cache size, suspending/resuming projects, and an unfortunate timing of the scheduler requesting work, I ended up with a huge bunch of WUs that I knew I wouldn't be able to finish in time, and if I tried, other WUs wouldn't finish in time. So I had to abort a large number of them. That would let others grab some; the only side effect would be that they might have to wait for a re-issue.

Joined: 14 Jul 05 · Posts: 275 · Credit: 49,291 · RAC: 0

> ...So I had to abort a large number of them. That would let others grab some; the only side effect would be that they might have to wait for a re-issue.

When the server receives an aborted workunit, or one with a computation error, it gets resent immediately (unless there is already a quorum). Well, in fact it gets added to the "ready to send" queue, and as with any other workunit, it may take some time to actually get sent to a computer.
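PovAddict's description of what happens to an aborted or errored result can be sketched as a small state update. This is a hypothetical model of the behaviour he describes, not the actual BOINC server code. It assumes (labelled as such in the code) that copies still out on other hosts count towards the quorum; the class and method names are made up, and the quorum of 3 is the figure mentioned later in this thread.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Workunit:
    name: str
    quorum: int           # successful results needed (3 is the figure cited for LHC)
    successes: int = 0    # valid results already returned
    in_progress: int = 0  # copies currently out on hosts


@dataclass
class Server:
    ready_to_send: deque = field(default_factory=deque)

    def result_failed(self, wu: Workunit) -> None:
        """Handle an aborted or errored result for wu (simplified model)."""
        wu.in_progress -= 1
        # Assumption: copies still out on other hosts count towards the quorum.
        missing = wu.quorum - (wu.successes + wu.in_progress)
        for _ in range(max(missing, 0)):
            # Not sent instantly: the replica waits in the queue like any other task.
            self.ready_to_send.append(wu.name)


server = Server()
wu = Workunit("example_wu", quorum=3, successes=1, in_progress=2)
server.result_failed(wu)  # one copy aborted -> one replacement queued
print(list(server.ready_to_send))  # ['example_wu']
```

If the quorum has already been reached, `missing` is zero or negative and nothing is queued, matching the "unless there is already a quorum" case.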

FalconFly · Joined: 2 Sep 04 · Posts: 121 · Credit: 592,214 · RAC: 0

> Falcon, you totally miss the point.

I know and fully understand that point. Still, regardless of cache size, getting WorkUnits or not comes down to one common factor: pure luck.

Therefore, the chance of a "short-cache" machine getting work is almost the same as for a "huge-cache" machine. If the work is handed out while the affected system is deferring communication for x hours/days, cache doesn't matter. The only difference is that the short-cacher missed about 7000s of work and the long-cacher missed 70000s of work... but both miss. The few lucky ones (as you can read all over the forum) are a big mix of all cache sizes (most actually rather small, due to personal preferences).

Additionally, I would ask you to consider one further, related point: do you (or anyone) think it is reasonable to ask users, many of whom are likely active in several other projects, to reduce their cache size just because of a few unhappy users of a single project? After all, reducing a selected cache size may have implications unknown to you or me, as people have very personal requirements.

Even if the few long-cachers were to change, looking at the numbers, it appears that the largest portion of work is returned rather quickly. Only a limited fraction is dragged closer to its deadline, indicating that the majority are already using rather small caches and returning work well before the deadline.

And overall, it seems clear that even with every single user choosing the minimum cache size, the project, given the amount of work available per batch, is far too overpowered. It would still leave users without WorkUnits: there are simply too many active hosts (72359 as of now; 40% active would still be about 30000, too many hungry machines for, e.g., 60k WorkUnits to last for everyone).

Bottom line: while it looks very attractive and conclusive at first sight, the impact of users with large caches isn't as large as it seems, and reducing their caches could not solve the problem (just improve it a little). The only case where I fully accept and support your point is users who fill up machines that cannot complete the work within the deadline (either due to performance or insufficient uptime).

-------------

IMHO the whole situation will only change for the better for everyone once the proposed changes are made and more work is available for the hungry masses.

Scientific Network : 45000 MHz - 77824 MB - 1970 GB
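FalconFly's capacity argument is plain arithmetic and easy to redo. The sketch below uses his figures (72,359 hosts, a guessed 40% active, a 60,000-WU batch); the 40% and the batch size are his rough estimates rather than measured values, and the function name is made up for illustration.

```python
def wus_per_active_host(total_hosts, active_fraction, batch_size):
    """Active host count, and WUs per active host if a batch were shared evenly."""
    active_hosts = total_hosts * active_fraction
    return active_hosts, batch_size / active_hosts


active, per_host = wus_per_active_host(72359, 0.40, 60000)
print(round(active))       # 28944 active hosts ("like 30000")
print(round(per_host, 2))  # 2.07 WUs per active host
```

Even with perfectly even sharing, that is only about two WUs per active host, which is his point: no cache setting makes a batch of that size go around for long.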

Joined: 21 May 06 · Posts: 73 · Credit: 8,710 · RAC: 0

Is it still the case that LHC does not currently grant credit for work done? There was a rumor to that effect.

Phil

[SETI.USA]Tank_Master · Joined: 17 Sep 04 · Posts: 22 · Credit: 983,416 · RAC: 0

They are granting credit just fine. It's the XML export that is broken, so other sites that show your stats aren't getting updated. This will be fixed after the handover of the server to the new management.

Joined: 7 Jul 06 · Posts: 4 · Credit: 196,678 · RAC: 0

Hi. As KAMasud said earlier in this thread, I think there is another point of view on this problem. I think the problem cannot be resolved entirely by limiting cache, WUs per day, Long Term Debt, etc. I think the project can do something else. Looking at "http://bluenorthernsoftware.com/scarecrow/lhcstats/", we can see that 80% of the WUs are crunched within 3 days and more than 90% within 4 days. These data are for the last batch of WUs, released on 03/01/07 between 18:00 and 24:00, which is still running. I think it is a waste of time for the project to "wait" another 3 or 4 days to receive the remaining 10% of results. Presumably these WUs initially went to slow, intermittent, or greedy crunchers, and that causes the delay in returning results.

I don't know if it is possible for the project to relaunch WUs when the number of remaining results drops below a certain limit, say 20% or 10%. That way, crunchers who are waiting for work would get WUs and finish the job in hours instead of days. Everyone is happy: the project finishes sooner and crunchers have more work. :) Yes, I know doing that has other implications (for example, it could be considered a punishment of the "slow or intermittent" crunchers, as it implies categorizing crunchers), but I think it would be a solution for projects like LHC, nanoHive, ... I think all of them have the same delay "problem" for the last 10-20% of WUs. Of course, this would only apply if the project had a new batch waiting for the previous one to finish. :)

Another question is whether LHC is entirely affected by this. For example, as I write this post, 715 WUs remain in progress, but I believe most of them have already reached the quorum of 3. If so, why can't the next batch be launched? :) I'm very new to this project, so perhaps I'm talking nonsense...
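The relaunch proposal boils down to a trigger: once the fraction of a batch still outstanding falls below a threshold, reissue the outstanding WUs to hosts that are waiting for work. A minimal sketch, assuming the server can count how many WUs in the batch have not yet reached their quorum; the 10% default is the poster's example, the function name is hypothetical, and this is not an existing BOINC feature. The numbers in the checks are toy values, not project data.

```python
def should_reissue_tail(outstanding, batch_size, threshold=0.10):
    """True when the unfinished tail of a batch is small enough to reissue.

    outstanding: WUs in the batch that have not yet reached their quorum
    batch_size:  total WUs released in the batch
    threshold:   reissue once outstanding / batch_size falls below this
    """
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    return outstanding / batch_size < threshold


# Toy values: 50 of 1000 WUs still outstanding is 5%, below the 10% trigger.
print(should_reissue_tail(50, 1000))
```

A real implementation would also have to decide what to do with the late results from the original hosts, which is where the "punishment" concern the poster raises comes in.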

©2026 CERN