Message boards : Number crunching : SOME greedy users


Joined: 22 Jan 06 · Posts: 21 · Credit: 125,903 · RAC: 0

Looking at many users being greedy. No WUs left for normal users. Can't there be a limit on the seconds of work collected per PC?

sysfried · Joined: 27 Sep 04 · Posts: 282 · Credit: 1,415,417 · RAC: 0

> Looking at many users being greedy.

As of right now, there is still work available, and there has been for more than 5 hours. Where is the problem? There is a limit of 500 WUs per CPU, plus the deadline limit, which won't let people download 10,000 WUs.

Sincerely, Sysfried

Ocean Archer · Joined: 13 Jul 05 · Posts: 143 · Credit: 263,300 · RAC: 0

Sysfried: maybe the individual(s) in question did what so many others did during the holiday season: expected that there would be no work forthcoming from LHC, and loaded up on other projects/tasks. Now, when work shows up, they cannot partake because their equipment is "overcommitted". Just another reason to set up your BOINC Manager properly and let it do its job.

If I've lived this long, I've gotta be that old

sysfried · Joined: 27 Sep 04 · Posts: 282 · Credit: 1,415,417 · RAC: 0

... Agreed!


Joined: 14 Jul 05 · Posts: 275 · Credit: 49,291 · RAC: 0

Like I've said a hundred times: a cache limit. No more than X workunits in progress per CPU. That will solve the problem.

Ocean Archer · Joined: 13 Jul 05 · Posts: 143 · Credit: 263,300 · RAC: 0

PovAddict: OK, I'll accept, for this discussion, that some limit must be imposed, but what criteria would you use to accomplish this? For example, I have four machines that run fairly slowly (8 hr/WU) and one that runs somewhat faster (2 hr/WU). Assuming I dedicate all my machines to LHC work and nothing else, this would give me a total throughput of 24 WUs/day. As of right now, all the WUs coming from LHC have a return date 7 days after release, so if everything runs perfectly, I could process a total of 168 WUs in that time. In reality, my actual throughput over a week's worth of runtime is about 1/3 of that amount, because of other projects/tasks. I am by no means the fastest cruncher out here, nor am I the slowest, and almost any method a programmer can come up with to control how much work is allocated, and to whom, can be circumvented. In short, the projects have enough problems keeping their work running on the meager budgets and grants they have; there's no extra money out there for "WU Police".

If I've lived this long, I've gotta be that old
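Ocean Archer's throughput figures can be checked with a few lines of arithmetic. This is a minimal Python sketch, assuming each machine runs one LHC work unit at a time around the clock; the variable names are illustrative only.

```python
# Back-of-the-envelope check of the throughput figures in the post.
# Assumption: each machine crunches one LHC WU at a time, 24 hours a day.

HOURS_PER_DAY = 24
DEADLINE_DAYS = 7  # LHC return window quoted in the post

# (number of machines, hours per WU) as described in the post
fleet = [(4, 8.0), (1, 2.0)]

def wus_per_day(machines):
    """WUs per day if every machine runs LHC non-stop."""
    return sum(count * HOURS_PER_DAY / hours_per_wu for count, hours_per_wu in machines)

ideal_daily = wus_per_day(fleet)                  # 4 machines * 3 + 1 machine * 12
ideal_per_deadline = ideal_daily * DEADLINE_DAYS  # work possible before the deadline
realistic_per_deadline = ideal_per_deadline / 3   # "about 1/3" with other projects

print(ideal_daily, ideal_per_deadline, realistic_per_deadline)  # prints: 24.0 168.0 56.0
```

The realistic figure of roughly 56 WUs per week is what any fair per-host limit would have to accommodate for this particular set of machines.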


Joined: 14 Jul 05 · Posts: 275 · Credit: 49,291 · RAC: 0

I'm not saying a limit per day. If the limit is 10 and you have 10, then as soon as you return one you can download another. It's about how many you can have in your cache at the same time. For dial-up users, though, 10 would be too small.
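The in-progress limit proposed here can be sketched as a per-host check on the server side. This is a hypothetical illustration, not the BOINC scheduler's actual code: `Host`, `slots_available` and the limit of 10 per CPU are assumptions taken from the example in the post.

```python
# Hypothetical sketch of a per-CPU "work in progress" cap.
# Host, slots_available and MAX_IN_PROGRESS_PER_CPU are illustrative names,
# not part of the BOINC scheduler.
from dataclasses import dataclass

MAX_IN_PROGRESS_PER_CPU = 10  # example limit from the post

@dataclass
class Host:
    ncpus: int
    in_progress: int  # WUs sent to this host but not yet returned

def slots_available(host: Host, limit_per_cpu: int = MAX_IN_PROGRESS_PER_CPU) -> int:
    """How many more WUs the server may send this host right now."""
    cap = host.ncpus * limit_per_cpu
    return max(0, cap - host.in_progress)

host = Host(ncpus=1, in_progress=10)
print(slots_available(host))  # cache full: 0
host.in_progress -= 1         # one result reported back
print(slots_available(host))  # room for exactly one more: 1
```

Because the cap counts work in progress rather than downloads per day, returning one result immediately frees one slot, as described above; a dial-up host would need a larger per-CPU value.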

Joined: 22 Jan 06 · Posts: 21 · Credit: 125,903 · RAC: 0

> Like I said a hundred times, cache limit. No more than X workunits in progress per CPU. That will solve the problem.

I propose a limit on seconds of work per X, at least for now, until more regular work is available.
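A "seconds of work" cap could be sketched like this: instead of counting work units, the server would compare a host's queued estimated runtime against a per-CPU time budget, so fast and slow machines end up with different WU counts. The function name and the two-day budget are hypothetical values chosen for illustration.

```python
# Hypothetical "seconds of work per CPU" cap: the limit is on the estimated
# runtime a host holds, not on the number of WUs.
MAX_SECONDS_PER_CPU = 2 * 24 * 3600  # assumed budget: two days of work per CPU

def wus_to_send(ncpus: int, queued_seconds: float, est_seconds_per_wu: float,
                max_seconds_per_cpu: float = MAX_SECONDS_PER_CPU) -> int:
    """Number of new WUs that fit under the per-CPU time budget."""
    headroom = ncpus * max_seconds_per_cpu - queued_seconds
    if headroom <= 0 or est_seconds_per_wu <= 0:
        return 0
    return int(headroom // est_seconds_per_wu)

print(wus_to_send(1, 0, 8 * 3600))  # slow host (8 h/WU): 6
print(wus_to_send(1, 0, 2 * 3600))  # fast host (2 h/WU): 24
```

With the same two-day budget, the 8-hour machine gets 6 WUs and the 2-hour machine gets 24, which speaks to the concern about machines of very different speeds.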

Joined: 22 Jan 06 · Posts: 21 · Credit: 125,903 · RAC: 0

> Looking at many users being greedy.

500 is a lot! The deadline limit is ineffective.

FalconFly · Joined: 2 Sep 04 · Posts: 121 · Credit: 592,214 · RAC: 0

Regardless of whatever limit is imposed on WorkUnit distribution, here's some advice: if you start looking like this while watching or waiting for WorkUnits on your PC... then it's time to relax, close your BOINC Manager and chill out. Seriously.

If you're lucky enough to catch work while it's there, great. If not, better luck next time. All the complaining is like buying a Jeep and then demanding it must go 150 mph. It just doesn't work, because it isn't designed to do that. LHC is exactly the same: as of now, it isn't designed to have an endless stream of WorkUnits pouring out. That's the type of project you joined, and for as long as this design is not changed, all the demands and complaints are absolutely futile (Don Quichotte comes to mind).

As the staff are preparing to change the design and provide a rather continuous work supply, it becomes even less likely that, with the upcoming changes, they will accommodate the unlucky folks who did not manage to snatch some WorkUnits. At this point, I would even go as far as to suspect they would 'recycle' previously completed batches of work just to keep the troops happy (this unfortunate principle has been proven to work, e.g. at SETI).

Scientific Network : 45000 MHz - 77824 MB - 1970 GB

sysfried · Joined: 27 Sep 04 · Posts: 282 · Credit: 1,415,417 · RAC: 0

MY PRECIOUS!!!!!! LHC WUs!!! ;-)


Joined: 24 Jul 05 · Posts: 35 · Credit: 19,384 · RAC: 0

I currently crunch for 14 projects, including LHC, on a single-CPU PC. I managed to get a single WU... it's just the luck of the draw. I could have got more if I'd suspended all the other projects so only LHC was active, but I didn't.

www.chris-kent.co.uk aka Chief.com

Logan5@SETI.USA · Joined: 30 Sep 04 · Posts: 112 · Credit: 104,059 · RAC: 0

> MY PRECIOUS!!!!!! LHC WUs!!!

No.... like this: MY PRESCIOUSSSSSSSS!!!!!! LHC WUsssssssss!!! lol.. ;)

Logan5@SETI.USA · Joined: 30 Sep 04 · Posts: 112 · Credit: 104,059 · RAC: 0

dupe post... Sorry!

siifred · Joined: 12 Dec 05 · Posts: 2 · Credit: 90,559 · RAC: 0

> Looking at many users being greedy.

Interesting thread, after I said that I have 6 that I can't finish in time. But what do you mean by 500 WU per CPU? At the risk of sounding stupid: is that a lifetime limit? I too only get a few WUs across my 2-3 computers; it's only by accident that I get any at all when they open. I was a little surprised that these WUs seem to take a bit longer this time.

siifred

Morgan the Gold · Joined: 18 Sep 04 · Posts: 38 · Credit: 173,867 · RAC: 0

My machines do them one at a time when they are around; if they aren't available, they do something else. If I wanted to do more LHC, I'd buy another PC. I understand the scientists want the results back ASAP. The problem with limiting the number per day or in progress is that someone always has a good reason to need more (this PC did 878 wanless WUs yesterday), and if I were on dial-up connecting once a week, or running a farm of 8 machines from 1 (using a USB drive), those limits would be inadequate. So in the end it is up to the cruncher to be responsible, and if they are going to be irresponsible, let's hope they download 500 WUs rather than drink and drive.

Joined: 14 Jul 05 · Posts: 275 · Credit: 49,291 · RAC: 0

> Interesting thread after I said that I have 6 that I can't finish in time. But what do you mean by 500 WU per CPU, to sound stupid, lifetime?

500 WUs per CPU per day, a.k.a. the daily quota. Also, the limit is reduced for each bad WU you return. I think it's -1 for each computing error and x2 for each good WU, but it never goes over 500.
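The quota rules described above (which the poster hedges with "I think") can be modelled as a small update function. This is a sketch under those stated assumptions, not LHC@home's server code; the floor of 1 is an extra assumption, and `update_quota` and `daily_limit` are hypothetical names.

```python
# Sketch of the daily-quota rules as described in the post (unconfirmed):
# -1 per computing error, doubled per good result, never above 500.
MAX_DAILY_QUOTA = 500

def update_quota(quota: int, result_ok: bool) -> int:
    """Return the per-CPU daily quota after one result is reported."""
    if result_ok:
        return min(quota * 2, MAX_DAILY_QUOTA)
    return max(quota - 1, 1)  # assumption: the quota never drops below 1

def daily_limit(quota: int, ncpus: int) -> int:
    """WUs a host may download per day: the quota applies per CPU."""
    return quota * ncpus

q = MAX_DAILY_QUOTA
for ok in (False, False, False, True):  # three errors, then one good result
    q = update_quota(q, ok)
print(q, daily_limit(q, ncpus=2))  # prints: 500 1000
```

Under these rules a host that returns good work stays pinned at the 500 cap, while a host that keeps erroring out loses quota one WU at a time.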

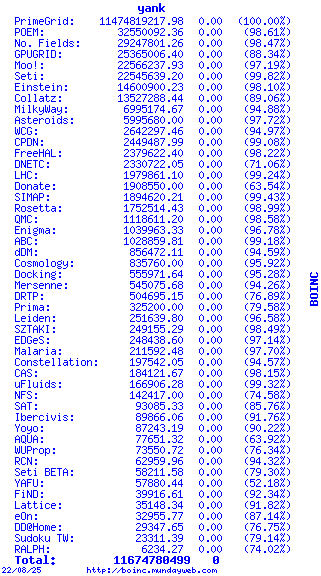

Yank · Joined: 21 Nov 05 · Posts: 64 · Credit: 1,979,861 · RAC: 0

I really don't want to be known as a greedy user. However, I run 9 computers, three of which are attached to LHC as well as other projects. When my computers are open to downloads and LHC has work to send (which is not often), how do I actually stop receiving too many units of work, and what counts as too many? I do know that when I receive LHC units, my SETI units take second place, because LHC units usually have a short completion and return time. I have never counted the units coming in, but lately it seems there have been many, and many of them have had a quick return time as well as a quick completion time.

If you are not connected with any other team perhaps you could join the US NAVY Team.

Joined: 14 Jul 05 · Posts: 275 · Credit: 49,291 · RAC: 0

> I do know that when I receive LHC units my SETI units take second place due to completion time as LHC units usually have a short completion return time.

You might have a DCF problem. Stop BOINC, then edit your client_state.xml so that LHC has `<duration_correction_factor>1.0</duration_correction_factor>` instead of whatever number it has now. I'd explain better, but I've got to go now...
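To see why a wrong DCF (duration correction factor) skews scheduling, here is a simplified Python model. It assumes the client's runtime estimate is the project's raw estimate multiplied by the DCF, and it uses an illustrative update rule (jump up on underestimates, decay slowly on overestimates) rather than the exact logic of any particular BOINC version; both function names are hypothetical.

```python
# Simplified model of BOINC's duration correction factor (DCF).
# Assumptions: estimate = raw project estimate * DCF, and the update rule
# below is illustrative rather than copied from a specific client version.

def estimated_runtime(raw_estimate_s: float, dcf: float) -> float:
    """Runtime the client would plan with for one task."""
    return raw_estimate_s * dcf

def update_dcf(dcf: float, raw_estimate_s: float, actual_s: float) -> float:
    """Move the DCF toward the observed actual/raw ratio after a task finishes."""
    ratio = actual_s / raw_estimate_s
    if ratio > dcf:
        return ratio                  # underestimate: correct immediately
    return 0.9 * dcf + 0.1 * ratio    # overestimate: decay gradually

dcf = 3.0
print(estimated_runtime(3600, dcf))  # a 1-hour task planned as 3 hours
for _ in range(50):                  # fifty tasks that really take 1 hour each
    dcf = update_dcf(dcf, 3600, 3600)
print(round(dcf, 2))
```

In this model an inflated DCF makes LHC tasks look three times longer than they are, which can push them ahead of other work; as tasks keep finishing on time, the DCF drifts back toward 1.0 without any hand-editing, consistent with the point that the client corrects the value over time.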

Gary Roberts · Joined: 22 Jul 05 · Posts: 72 · Credit: 3,962,626 · RAC: 0

> I do know that when I receive LHC units my SETI units take second place due to completion time as LHC units usually have a short completion return time.

The advice above to edit the DCF is possibly quite bad advice if you don't do some basic checks first. If the actual completion time for a normal, full-length work unit is approximately the same as the predicted time shown before crunching starts, there is absolutely no need to change the DCF. In any case, the BOINC client will adjust the value as required over time, even if it is a long way off. By editing client_state.xml by hand, you are taking the risk that a simple typo on your part could trash your whole cache of work units.

Please realise that you will always get a burst of work units when an out-of-work project suddenly gets work again. The critical factor in this case is the 'connect to network' setting in your general preferences. Seti was mentioned as a coexisting project, so I'm guessing that a cache size of several days was in force to guard against times when Seti is having problems.

As an example of what can happen, let us assume just two projects, Seti and LHC, with a 50/50 resource share and a cache size of 5 days. This might be considered fine for Seti, and there might be times when you need a cache this large to avoid running out of work, if LHC was in its 'dry' period and you had no other projects to fall back on. If LHC suddenly gets work, BOINC will effectively download 10 days of work, i.e. a full 5-day cache for LHC on top of a 5-day Seti cache. The problem would be magnified if the LHC share were less than 50%. If the ratio were 80/20 in favour of Seti, BOINC would still download 5 days of work for LHC, which could theoretically take 25 days to complete because of the 20% resource share. BOINC has mechanisms to protect against this: it can decide that the computer is overcommitted and use EDF (earliest deadline first) mode to clear the 'at-risk' work.

From the description given in Yank's post, I'm guessing that the solution is to lower the 'connect to network' setting a bit if too much work is being downloaded when LHC suddenly has new work. However, Yank needs to give a lot more information to be sure about this.

Cheers, Gary.
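Gary's cache arithmetic, plus the deadline check that would push the host into EDF mode, can be sketched in Python. Assumptions: a project's cached work is processed at its resource-share fraction of the machine, the LHC deadline is the 7 days mentioned earlier in the thread, the 14-day Seti deadline in the example is made up, and `edf_order` is a bare earliest-deadline-first sort, not BOINC's actual CPU scheduler.

```python
# Worked version of the 5-day-cache example.
# Assumption: a project's cached work progresses at its resource-share
# fraction of the machine's time.

CACHE_DAYS = 5.0     # 'connect to network' setting in the example
DEADLINE_DAYS = 7.0  # LHC return deadline mentioned in the thread

def days_to_finish(cached_days: float, share: float) -> float:
    """Wall-clock days needed to crunch `cached_days` of one project's work."""
    return cached_days / share

def is_overcommitted(cached_days: float, share: float, deadline_days: float) -> bool:
    """True if the cached work cannot finish before its deadline at this share."""
    return days_to_finish(cached_days, share) > deadline_days

def edf_order(tasks):
    """Earliest deadline first: run the task with the nearest deadline first.

    `tasks` is a list of (name, deadline_in_days) pairs.
    """
    return sorted(tasks, key=lambda task: task[1])

for lhc_share in (0.5, 0.2):
    days = days_to_finish(CACHE_DAYS, lhc_share)
    risky = is_overcommitted(CACHE_DAYS, lhc_share, DEADLINE_DAYS)
    print(f"LHC share {lhc_share:.0%}: {days:.0f} days to finish, overcommitted: {risky}")

# Hypothetical deadlines: the LHC task would be run first.
print(edf_order([("seti_wu", 14.0), ("lhc_wu", 7.0)]))
```

Under these assumptions even the 50/50 case needs about 10 days to clear 5 days of LHC work, longer than a 7-day deadline, which is consistent with the suggestion to lower the 'connect to network' setting.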

©2026 CERN