Work available, but project is still down!


| Author | Message |

|---|---|

The Gas Giant | Joined: 2 Sep 04 Posts: 309 Credit: 715,258 RAC: 0 |

40910 WUs available on the front page, but my computer says the project is still down. Bugger! Paul (S@H1 8888) BOINC/SAH BETA |

Joined: 17 Sep 05 Posts: 60 Credit: 4,221 RAC: 0 |

1/13/2006 11:29:28 PM|LHC@home|Message from server: Project is temporarily shut down for maintenance
1/13/2006 11:29:28 PM|LHC@home|Project is down
1/13/2006 11:29:29 PM|LHC@home|Deferring communication with project for 58 seconds

The reason for the project being down seems to be self-explanatory! I wonder when it will come back up? |

Jim Baize | Joined: 17 Sep 04 Posts: 103 Credit: 38,543 RAC: 0 |

It appears they are filling up the outbound queue so that when they decide to turn on the scheduler, there should be enough WUs to go around. The server will be flooded for the first few hours, but then things should start leveling off. Keep your eyes on LHC; I think they will be sending out WUs very soon. Jim |

The Gas Giant | Joined: 2 Sep 04 Posts: 309 Credit: 715,258 RAC: 0 |

Either:

1. They forgot to switch on the upload/download server, or
2. They want to do a speed and load test, so they are loading up the WUs.

Oh, and I love the communication level! If Chrulle had said they would load up the WUs and then let them go, I wouldn't have wasted my time checking every 30 minutes to see if work was being downloaded. Ho hum, another project that treats its VOLUNTEERS like they are a commodity, but then again, we are. Live long and crunch. Paul. |

Joined: 13 Jul 05 Posts: 456 Credit: 75,142 RAC: 0 |

No, a project that does not expect its users to micromanage. It gives us all time to get our clients asking for work; the clients will ask repeatedly (according to the backoff algorithm), and pretty soon now the work will be coming. There is absolutely no need to sit at the computer doing a manual check every 30 mins. The project expects us to treat our boxes as a commodity, and to leave them on autopilot to pick up the work as soon as it is available. The server is now up (see Server Status), so the tension is rising...

Edit, added: You will know work is being issued when you start to see a number of WUs in progress. Those first WUs will go to people whose machines are already asking for work, in a random order determined by the backoff algorithm. That seems to me a fairer lottery than people getting work fast simply because they look at the home page every 3 minutes. I've got nine boxes now asking for LHC work, with deferral periods ranging from 3 hrs down to 5 mins. I am happy about that: it means I will get work reasonably soon whenever it comes on. Instead of a lottery over who sees the front page first, we can all get our boxes asking, and then the work is spread out fairly over the first 3 hrs after it is issued. R~~ |

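The point above is that clients retrying a down project, each with its own randomized backoff, end up reaching the server in an effectively random order once work appears. A minimal Python sketch of that idea follows. It uses a generic randomized exponential backoff with made-up constants (`MIN_BACKOFF`, `MAX_BACKOFF`) and hypothetical function names; it is not the BOINC client's actual algorithm or parameters.

```python
import random

# Hypothetical parameters for illustration only; not BOINC's real values.
MIN_BACKOFF = 60          # seconds
MAX_BACKOFF = 4 * 3600    # seconds


def next_backoff(failures: int, rng: random.Random) -> float:
    """Randomized exponential backoff: the upper bound doubles with each
    consecutive failure (capped at MAX_BACKOFF), and the actual delay is
    drawn uniformly between MIN_BACKOFF and that bound."""
    upper = min(MAX_BACKOFF, MIN_BACKOFF * 2 ** failures)
    return rng.uniform(MIN_BACKOFF, upper)


def first_contact_times(n_clients: int, work_starts_at: float,
                        seed: int = 0) -> list[float]:
    """Simulate n_clients that start asking at t=0 and keep retrying a
    server that has no work until work_starts_at. Return, for each client,
    the time of its first request after work became available."""
    rng = random.Random(seed)
    results = []
    for _ in range(n_clients):
        t, failures = 0.0, 0
        # Every request before work_starts_at fails and triggers a backoff.
        while t < work_starts_at:
            failures += 1
            t += next_backoff(failures, rng)
        results.append(t)
    return results
```

For example, `sorted(first_contact_times(9, 12 * 3600))` models nine boxes that have been asking for twelve hours before work is released. Because the last failed request happened before the release and no delay exceeds `MAX_BACKOFF`, every first contact falls within `MAX_BACKOFF` of the release time, in an order set by the random draws rather than by who happens to be watching the front page.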

Paul D. Buck | Joined: 2 Sep 04 Posts: 545 Credit: 148,912 RAC: 0 |

Sadly, those of us that detached to limit log growth cannot attach and sit in the pending queue ... Since it is Saturday, we may not see the scheduler turned on until Monday ... :( Oh well, I have to drain some work so I can re-allocate my percentages while LHC has work ... |

ksba | Joined: 27 Sep 04 Posts: 40 Credit: 1,742,415 RAC: 0 |

"Since it is Saturday, we may not see the scheduler turned on until Monday ... :(" Perhaps there is a web admin interface... We can only hope so. I hope so. |

|

Joined: 25 Jul 05 Posts: 7 Credit: 200,424 RAC: 0 |

I just really think that it's bad style to not communicate with us at all for such a long time. Then they ramp up the WUs but there's still no word from the DEVs. If they had time to create new WUs they also had the time to tell us what's going on. :( |


Joined: 18 Sep 04 Posts: 163 Credit: 1,682,370 RAC: 0 |

"I just really think that it's bad style to not communicate with us at all for such a long time. Then they ramp up the WUs but there's still no word from the DEVs. If they had time to create new WUs they also had the time to tell us what's going on." Don't be too harsh. Some posts indicate that the admins are not the ones who generate the work. And at least Ben and Chrulle are working on Malaria Control too. Besides, it was clear from the beginning that LHC would not provide a consistent stream of work. Michael

PS: This seems to be a new all-time record: Up, 285600 workunits to crunch, no work in progress, 31 concurrent connections.

Team Linux Users Everywhere |

Ben Segal | Joined: 1 Sep 04 Posts: 143 Credit: 2,579 RAC: 0 |

"I just really think that it's bad style to not communicate with us at all for such a long time. Then they ramp up the WUs but there's still no word from the DEVs. If they had time to create new WUs they also had the time to tell us what's going on." Michael is quite correct: the work is generated by accelerator engineers who don't always warn the admins (or the LHC@home coordinator) what/when they are about to submit. That's what has happened this time. Chrulle is now alerted and will unblock the scheduler when he can (it's Saturday afternoon here ...). We will give out information on the home page when we know more details ourselves. In the meantime, Happy New Year to all our crunchers. Ben Segal |

Joined: 22 Oct 04 Posts: 39 Credit: 46,748 RAC: 0 |

Thank you, Ben. We await patiently for the unlocking.

|

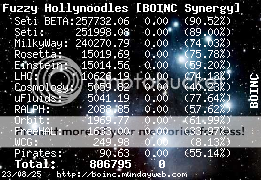

Fuzzy Hollynoodles | Joined: 13 Jul 05 Posts: 51 Credit: 10,626 RAC: 0 |

"Michael is quite correct: the work is generated by accelerator engineers who don't always warn the admins (or the LHC@home coordinator) what/when they are about to submit." And Happy New Year to you too. :-) Yes, we're all waiting anxiously for the new WUs. "I'm trying to maintain a shred of dignity in this world" - Me |

Joined: 12 Dec 05 Posts: 1 Credit: 119 RAC: 0 |

"Michael is quite correct: the work is generated by accelerator engineers who don't always warn the admins (or the LHC@home coordinator) what/when they are about to submit." How about inviting those guys into this board, to show them what turmoil is caused by their lack of communication-culture? ;-) Sincerely, L. |

Joined: 13 Jul 05 Posts: 456 Credit: 75,142 RAC: 0 |

"Sadly, those of us that detached to limit log growth cannot attach and sit in the pending queue ..." Not for months on end, but can't you let the log grow over the weekend and prune it every 24 hrs or so? That seems better than trying to babysit the boxes every half hour. |

Fuzzy Hollynoodles | Joined: 13 Jul 05 Posts: 51 Credit: 10,626 RAC: 0 |

"Sadly, those of us that detached to limit log growth cannot attach and sit in the pending queue ..." I agree with you, River~~: when you know it's only a question of time, just let the log grow. Or you can exit BOINC and open it again from time to time; then the log is cleared. "I'm trying to maintain a shred of dignity in this world" - Me |

Joined: 13 Jul 05 Posts: 456 Credit: 75,142 RAC: 0 |

"Sadly, those of us that detached to limit log growth cannot attach and sit in the pending queue" For future reference, setting No New Work seems as effective as detaching, both for keeping the log short and for project shares, and it is easier to reverse. Added to which, with a lot of boxes it is easier to switch No New Work to Allow New Work on all of them at once with a couple of mouse clicks in BoincView than it is to re-attach a lot of boxes to a project when the server is inundated. R~~ |
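The same bulk toggle can also be scripted rather than clicked. The sketch below assumes a current BOINC client whose command-line tool `boinccmd` accepts `--host`, `--passwd` and `--project URL allowmorework` / `nomorework`, and that each box accepts remote GUI RPCs from the machine running the script. `boinccmd_argv` and `set_new_work` are hypothetical helpers, not part of BOINC or BoincView, and the host names, password and project URL are placeholders.

```python
import subprocess


def boinccmd_argv(host: str, password: str, project_url: str,
                  allow: bool) -> list[str]:
    """Build a boinccmd command line that switches new-work fetching for one
    project on one (possibly remote) BOINC client."""
    op = "allowmorework" if allow else "nomorework"
    return ["boinccmd", "--host", host, "--passwd", password,
            "--project", project_url, op]


def set_new_work(hosts: list[str], password: str, project_url: str,
                 allow: bool, dry_run: bool = True) -> list[list[str]]:
    """Build (and, unless dry_run, execute) one command per host.
    Returns the command lines so they can be inspected first."""
    commands = [boinccmd_argv(h, password, project_url, allow) for h in hosts]
    if not dry_run:
        for argv in commands:
            # check=False: a non-zero exit from one box (e.g. unreachable)
            # does not raise, so the remaining boxes are still updated.
            subprocess.run(argv, check=False)
    return commands
```

Usage, with placeholder values: `set_new_work(["box1", "box2"], "secret", "http://project.example/", allow=True, dry_run=False)`. Leaving `dry_run` at its default of `True` only prints nothing and returns the commands, which makes it easy to check them before touching any client.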

Paul D. Buck | Joined: 2 Sep 04 Posts: 545 Credit: 148,912 RAC: 0 |

Since I can add them back with a similar few mouse clicks, it does not seem that disadvantageous to just disconnect them. Also, when you are tracking resource share percentages as I do, the presence of inactive projects messes up my addition ... So, no big deal either way ... As far as log trimming goes, where do you think I got a lot of the messages for the Wiki? I let the logs run and collect them in their entirety ... and having dead projects in there just makes the logs longer for no gain. Anyway, it's my operational choice and not a big deal ... when they allow work, I can attach again ... |

Joined: 29 Aug 05 Posts: 42 Credit: 27,102 RAC: 0 |

"How about inviting those guys into this board, to show them what turmoil is caused by their lack of communication-culture? ;-)"

1) The accelerator engineers have no investment in this forum as far as PR goes. I sincerely doubt they care a great deal that you're as ramped up as a three-year-old on birthday cake about the work.
2) You're as ramped up as a three-year-old on birthday cake about the work available.

We've been stoic and patient this far, people. Let's take a deep breath here, eh? (j) James |

Joined: 27 Sep 04 Posts: 5 Credit: 1,599,533 RAC: 970 |

WUs are now available!! I just got 2!!!! |

©2026 CERN