Running 2 WU's simultaneously with one processor after benchmark..


| Author | Message |

|---|---|

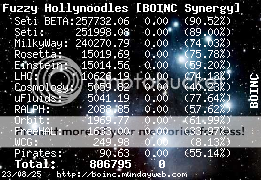

Fuzzy Hollynoodles Joined: 13 Jul 05 Posts: 51 Credit: 10,626 RAC: 0 |

I was checking the Work tab in my BOINC Manager and saw that two WU's, an Einstein and a LHC, were running at the same time. I don't have a HT processor!

18-07-2005 15:58:29||Starting BOINC client version 4.45 for windows_intelx86
18-07-2005 15:58:29||Data directory: C:\Programmer\BOINC
18-07-2005 15:58:30|Einstein@Home|Computer ID: 56003; location: home; project prefs: home
18-07-2005 15:58:30|LHC@home|Computer ID: 38657; location: home; project prefs: home
18-07-2005 15:58:30|orbit@home|Computer ID: 245; location: home; project prefs: default
18-07-2005 15:58:30|SETI@home|Computer ID: 1138836; location: home; project prefs: home
18-07-2005 15:58:30|SETI@home Beta Test|Computer ID: 470; location: home; project prefs: default
18-07-2005 15:58:30||General prefs: from SETI@home (last modified 2005-07-04 10:43:55)
18-07-2005 15:58:30||General prefs: using separate prefs for home
18-07-2005 15:58:32||Remote control not allowed; using loopback address
18-07-2005 15:58:32|Einstein@Home|Deferring computation for result l1_0672.0__0672.2_0.1_T10_S4lA_2
18-07-2005 15:58:32|LHC@home|Resuming computation for result wjun1B_v6s4hvnom_mqx__15__64.289_59.299__6_8__6__25_1_sixvf_boinc21307_3 using sixtrack version 4.67
18-07-2005 15:58:34|orbit@home|Deferring communication with project for 28 minutes and 10 seconds
18-07-2005 15:58:40|SETI@home|Started upload of 07fe05aa.532.5536.828400.7_3_0
....
18-07-2005 23:50:08|Einstein@Home|Pausing result l1_0672.0__0672.2_0.1_T10_S4lA_2 (removed from memory)
18-07-2005 23:50:08|LHC@home|Restarting result wjun1B_v6s4hvnom_mqx__17__64.271_59.281__6_8__6__65_1_sixvf_boinc22947_1 using sixtrack version 4.67
18-07-2005 23:50:09||request_reschedule_cpus: process exited
19-07-2005 00:52:52||Using earliest-deadline-first scheduling because computer is overcommitted.
19-07-2005 01:23:55||Suspending computation and network activity - running CPU benchmarks
19-07-2005 01:23:55|LHC@home|Pausing result wjun1B_v6s4hvnom_mqx__17__64.271_59.281__6_8__6__65_1_sixvf_boinc22947_1 (removed from memory)
19-07-2005 01:23:55||request_reschedule_cpus: process exited
19-07-2005 01:23:57||Running CPU benchmarks
19-07-2005 01:24:54||Benchmark results:
19-07-2005 01:24:54|| Number of CPUs: 1
19-07-2005 01:24:54|| 1387 double precision MIPS (Whetstone) per CPU
19-07-2005 01:24:54|| 2782 integer MIPS (Dhrystone) per CPU
19-07-2005 01:24:54||Finished CPU benchmarks
19-07-2005 01:24:54||Resuming computation and network activity
19-07-2005 01:24:54||request_reschedule_cpus: Resuming activities
19-07-2005 01:24:54|Einstein@Home|Restarting result l1_0672.0__0672.2_0.1_T10_S4lA_2 using einstein version 4.79
19-07-2005 01:24:54|LHC@home|Restarting result wjun1B_v6s4hvnom_mqx__17__64.271_59.281__6_8__6__65_1_sixvf_boinc22947_1 using sixtrack version 4.67
19-07-2005 02:23:33|SETI@home|Started upload of 07fe05aa.532.5536.828400.7_3_0
19-07-2005 02:24:24|SETI@home|Temporarily failed upload of 07fe05aa.532.5536.828400.7_3_0: -106
19-07-2005 02:24:24|SETI@home|Backing off 1 hours, 13 minutes, and 40 seconds on upload of file 07fe05aa.532.5536.828400.7_3_0
19-07-2005 02:24:54|LHC@home|Pausing result wjun1B_v6s4hvnom_mqx__17__64.271_59.281__6_8__6__65_1_sixvf_boinc22947_1 (removed from memory)
19-07-2005 02:24:56||request_reschedule_cpus: process exited

Can someone tell me if they are both corrupted now?

"I'm trying to maintain a shred of dignity in this world" - Me
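
One way to check a log like the one above for overlap is to replay the messages and track which results are active at each step. The sketch below is only an illustration: the name `find_overlaps` is hypothetical, and the decision about which message prefixes count as "start" or "stop" events is an assumption drawn from the lines quoted in this thread, not from any documented BOINC message format.

```python
import re

# Message prefixes assumed (from the quoted log) to mean a result begins running.
START = re.compile(
    r"^(?:Starting|Restarting) result (\S+)"
    r"|^Resuming computation for result (\S+)"
)
# Message prefixes assumed to mean a result stops running.
STOP = re.compile(
    r"^Pausing result (\S+)"
    r"|^Computation for result (\S+) finished"
    r"|^Deferring computation for result (\S+)"
)


def find_overlaps(lines, ncpus=1):
    """Return (timestamp, running results) for every start event that
    leaves more results running than there are CPUs."""
    running = set()
    overlaps = []
    for line in lines:
        # Log lines look like "timestamp|project|message"; skip anything else.
        parts = line.strip().split("|", 2)
        if len(parts) != 3:
            continue
        stamp, _project, message = parts
        match = START.match(message)
        if match:
            running.add(next(g for g in match.groups() if g))
            if len(running) > ncpus:
                overlaps.append((stamp, sorted(running)))
            continue
        match = STOP.match(message)
        if match:
            running.discard(next(g for g in match.groups() if g))
    return overlaps
```

Fed the entries from 01:23:55 onward, this should flag 19-07-2005 01:24:54, the moment both the Einstein and the LHC result are restarted after the benchmark on a machine reporting one CPU.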

|

|

Joined: 2 Sep 04 Posts: 71 Credit: 8,657 RAC: 0 |

> Can someone tell me if they are both corrupted now?

They shouldn't be. This is an elusive BOINC issue that crops up from time to time. The only harm done should be slower processing due to the science apps fighting for CPU cycles. |

Fuzzy Hollynoodles Joined: 13 Jul 05 Posts: 51 Credit: 10,626 RAC: 0 |

> > Can someone tell me if they are both corrupted now?
>
> They shouldn't be. This is an elusive BOINC issue that crops up from time to time. The only harm done should be slower processing due to the science apps fighting for CPU cycles.

Thanks! Phew!!! :-)

"I'm trying to maintain a shred of dignity in this world" - Me

|

|

Joined: 23 Oct 04 Posts: 8 Credit: 1,751,242 RAC: 1,862 |

It happened also to me. Results were OK, CPU time was just divided for both applications, so it took longer time to complete WUs. I restarted BOINC and everything was normal again afterwards. |

Jim Baize Joined: 17 Sep 04 Posts: 103 Credit: 38,543 RAC: 0 |

Is this a self-correcting issue? Meaning, after the 60 minutes of crunching both WU's, does it then go back to crunching only 1, or does it continue to crunch 2 at a time?

Jim

> It happened also to me. Results were OK, CPU time was just divided for both applications, so it took longer time to complete WUs. I restarted BOINC and everything was normal again afterwards.

|

Fuzzy Hollynoodles Joined: 13 Jul 05 Posts: 51 Credit: 10,626 RAC: 0 |

> Is this a self-correcting issue? Meaning, after the 60 minutes of crunching both WU's, does it then go back to crunching only 1, or does it continue to crunch 2 at a time?
>
> Jim
>
> > It happened also to me. Results were OK, CPU time was just divided for both applications, so it took longer time to complete WUs. I restarted BOINC and everything was normal again afterwards.

Yes, it seemed to correct itself:

19-07-2005 01:23:55|LHC@home|Pausing result wjun1B_v6s4hvnom_mqx__17__64.271_59.281__6_8__6__65_1_sixvf_boinc22947_1 (removed from memory)
19-07-2005 01:23:55||request_reschedule_cpus: process exited
19-07-2005 01:23:57||Running CPU benchmarks
19-07-2005 01:24:54||Benchmark results:
19-07-2005 01:24:54|| Number of CPUs: 1
19-07-2005 01:24:54|| 1387 double precision MIPS (Whetstone) per CPU
19-07-2005 01:24:54|| 2782 integer MIPS (Dhrystone) per CPU
19-07-2005 01:24:54||Finished CPU benchmarks
19-07-2005 01:24:54||Resuming computation and network activity
19-07-2005 01:24:54||request_reschedule_cpus: Resuming activities
19-07-2005 01:24:54|Einstein@Home|Restarting result l1_0672.0__0672.2_0.1_T10_S4lA_2 using einstein version 4.79
19-07-2005 01:24:54|LHC@home|Restarting result wjun1B_v6s4hvnom_mqx__17__64.271_59.281__6_8__6__65_1_sixvf_boinc22947_1 using sixtrack version 4.67
19-07-2005 02:23:33|SETI@home|Started upload of 07fe05aa.532.5536.828400.7_3_0
19-07-2005 02:24:24|SETI@home|Temporarily failed upload of 07fe05aa.532.5536.828400.7_3_0: -106
19-07-2005 02:24:24|SETI@home|Backing off 1 hours, 13 minutes, and 40 seconds on upload of file 07fe05aa.532.5536.828400.7_3_0
19-07-2005 02:24:54|LHC@home|Pausing result wjun1B_v6s4hvnom_mqx__17__64.271_59.281__6_8__6__65_1_sixvf_boinc22947_1 (removed from memory)
19-07-2005 02:24:56||request_reschedule_cpus: process exited
19-07-2005 03:26:22||request_reschedule_cpus: process exited
19-07-2005 03:26:22|Einstein@Home|Computation for result l1_0672.0__0672.2_0.1_T10_S4lA_2 finished
19-07-2005 03:26:22||Resuming round-robin CPU scheduling.
19-07-2005 03:26:23|LHC@home|Restarting result wjun1B_v6s4hvnom_mqx__17__64.271_59.281__6_8__6__65_1_sixvf_boinc22947_1 using sixtrack version 4.67
19-07-2005 03:26:23|Einstein@Home|Started upload of l1_0672.0__0672.2_0.1_T10_S4lA_2_0
19-07-2005 03:26:42|Einstein@Home|Finished upload of l1_0672.0__0672.2_0.1_T10_S4lA_2_0
19-07-2005 03:26:42|Einstein@Home|Throughput 7362 bytes/sec
19-07-2005 03:26:43|Einstein@Home|Sending scheduler request to http://einstein.phys.uwm.edu/EinsteinAtHome_cgi/cgi
19-07-2005 03:26:43|Einstein@Home|Requesting 0 seconds of work, returning 1 results
19-07-2005 03:26:45|Einstein@Home|Scheduler request to http://einstein.phys.uwm.edu/EinsteinAtHome_cgi/cgi succeeded
19-07-2005 03:38:05|SETI@home|Started upload of 07fe05aa.532.5536.828400.7_3_0
19-07-2005 03:38:11|SETI@home|Temporarily failed upload of 07fe05aa.532.5536.828400.7_3_0: -106
19-07-2005 03:38:11|SETI@home|Backing off 2 hours, 38 minutes, and 2 seconds on upload of file 07fe05aa.532.5536.828400.7_3_0
19-07-2005 03:42:49||request_reschedule_cpus: process exited
19-07-2005 03:42:49|LHC@home|Computation for result wjun1B_v6s4hvnom_mqx__17__64.271_59.281__6_8__6__65_1_sixvf_boinc22947_1 finished
19-07-2005 03:42:49|LHC@home|Starting result wjun1B_v6s4hvnom_mqx__17__64.274_59.284__6_8__6__70_1_sixvf_boinc23152_3 using sixtrack version 4.67
19-07-2005 03:42:49|LHC@home|Started upload of wjun1B_v6s4hvnom_mqx__17__64.271_59.281__6_8__6__65_1_sixvf_boinc22947_1_0
19-07-2005 03:42:56|LHC@home|Finished upload of wjun1B_v6s4hvnom_mqx__17__64.271_59.281__6_8__6__65_1_sixvf_boinc22947_1_0
19-07-2005 03:42:56|LHC@home|Throughput 7551 bytes/sec
19-07-2005 03:42:57|LHC@home|Sending scheduler request to http://lhcathome-sched1.cern.ch/scheduler/cgi
19-07-2005 03:42:57|LHC@home|Requesting 0 seconds of work, returning 1 results
19-07-2005 03:42:58|LHC@home|Scheduler request to http://lhcathome-sched1.cern.ch/scheduler/cgi succeeded

It seemed that the failed upload to Seti got it back to normal! But funny, when I marked the LHC WU, which was stated as preempted, the Show Graphic button was still highlighted!

"I'm trying to maintain a shred of dignity in this world" - Me

|

|

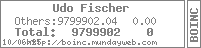

Joined: 14 Jul 05 Posts: 3 Credit: 562,479 RAC: 0 |

This is the 'normal' behaviour if you have more than one project. At switch time all activity is suspended and the next project gets the processor. If you have 'even weighted' projects (i.e. each project with a resource share of '100') then BOINC will switch the projects every hour.

Udo |
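
The hourly switching described in this post can be pictured with a toy model. The sketch below is an assumption-laden illustration, not BOINC's actual scheduler: `schedule_slices` and its "debt" bookkeeping are hypothetical, and each slice stands for one switch interval (one hour in the case described). Every project earns credit in proportion to its resource share, and the project owed the most gets the single CPU for the next slice.

```python
def schedule_slices(shares, slices):
    """Pick one project per time slice on a single CPU.

    shares: dict mapping project name to resource share (e.g. 100).
    slices: number of switch intervals to simulate.
    This is a simplified model, not the real BOINC algorithm.
    """
    total = sum(shares.values())
    debt = {name: 0.0 for name in shares}
    order = []
    for _ in range(slices):
        # Each project accrues entitlement in proportion to its share.
        for name, share in shares.items():
            debt[name] += share / total
        # Run the project that is owed the most CPU time;
        # ties go to the project listed first.
        chosen = max(debt, key=debt.get)
        debt[chosen] -= 1.0
        order.append(chosen)
    return order


if __name__ == "__main__":
    # Two evenly weighted projects simply alternate slice by slice.
    print(schedule_slices({"Einstein@Home": 100, "LHC@home": 100}, 4))
```

In this model only one project is ever chosen per slice, which is the expected single-CPU behaviour; two results running at once, as reported at the top of the thread, falls outside it.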

©2026 CERN